
The competition for dominance in the artificial intelligence chip market has entered a new phase as AMD officially introduced its Instinct MI350 Series GPUs this week. Launched at the company’s Advancing AI 2025 conference, these next-generation graphics processors promise significant advances in AI performance and energy efficiency—directly challenging Nvidia’s powerful Blackwell architecture.

AI Performance Breakthroughs With the Instinct MI350 Series

AMD claims the new Instinct MI350 GPUs deliver a fourfold increase in AI compute performance over the company’s previous generation. The gain is aimed squarely at Nvidia’s Blackwell chips and at the growing demands of generative AI, machine learning, and high-performance data center workloads.

Beyond raw compute, AMD cites a 35x generational improvement in AI inferencing. On value, the company says the MI350 Series delivers 40% more tokens per dollar than competing parts such as the Nvidia B200, which could lower the cost barrier for organizations developing large language models and other AI solutions.
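To put the tokens-per-dollar claim in concrete terms, the short sketch below converts a relative tokens-per-dollar advantage into the implied drop in cost per token. The function name is hypothetical and the only input taken from the article is AMD's 40% figure, which is a vendor claim and is not verified here.

```python
def cost_per_token_reduction(tokens_per_dollar_gain: float) -> float:
    """Fractional drop in cost per token implied by a relative gain
    in tokens per dollar (0.40 means 40% more tokens per dollar)."""
    if tokens_per_dollar_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    # Cost per token is the reciprocal of tokens per dollar.
    return 1.0 - 1.0 / (1.0 + tokens_per_dollar_gain)


# AMD's claimed advantage over the Nvidia B200 (vendor figure, assumed as input).
reduction = cost_per_token_reduction(0.40)
print(f"Implied cost-per-token reduction: {reduction:.1%}")
```

Under these assumptions, 40% more tokens per dollar works out to roughly 29% lower cost per token, not 40%, because cost per token scales with the reciprocal of tokens per dollar.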

Innovative Hardware Solutions: The ‘Helios’ AI Rack

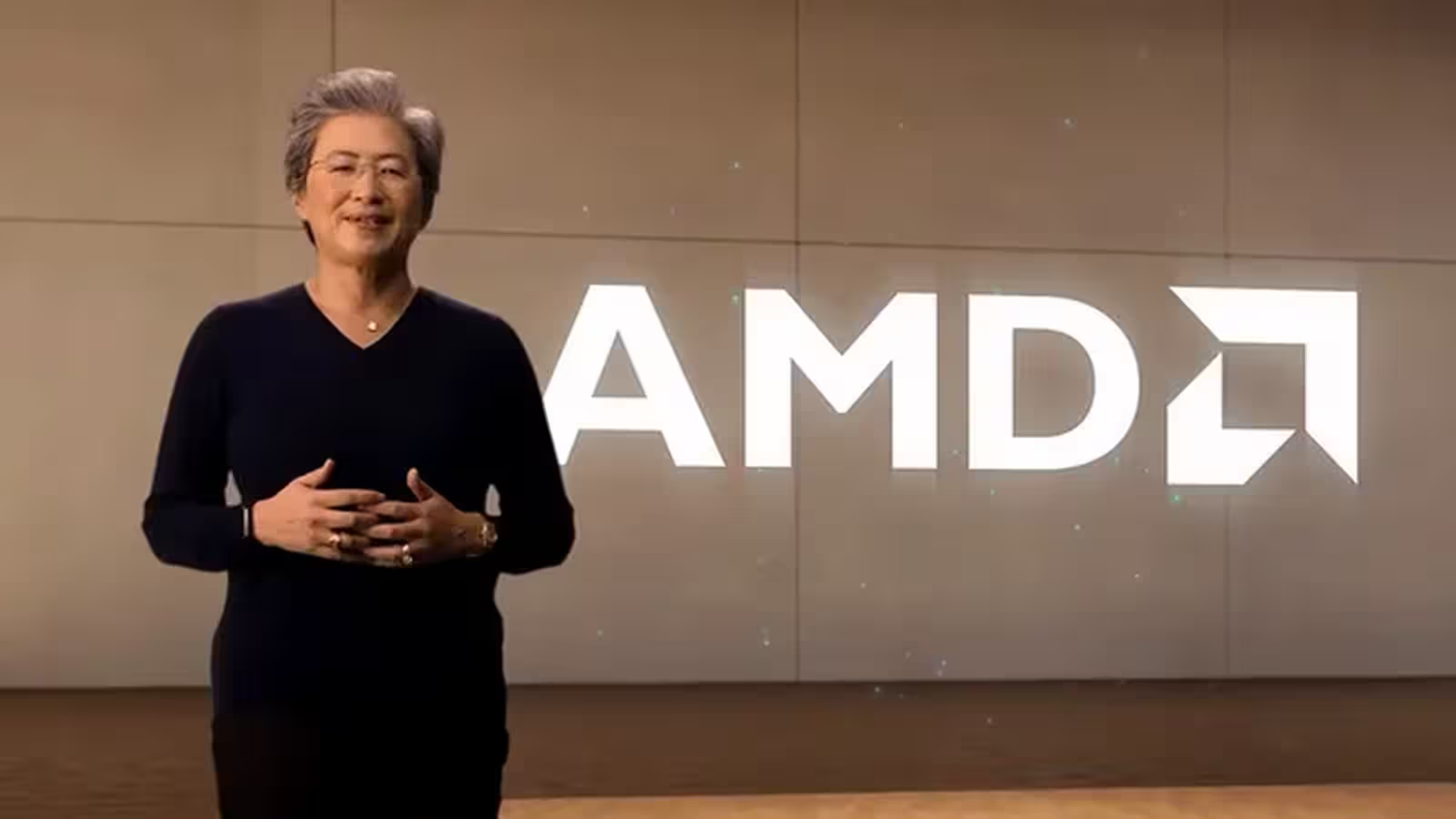

CEO Dr. Lisa Su also unveiled plans for the Helios AI Rack—a scalable rack-level solution that will integrate future Instinct MI400 GPUs, next-gen AMD EPYC Venice CPUs, and AMD Pensando Vulcano network interface cards. This system is designed to address both computational intensity and interconnect efficiency required for future AI and HPC environments.

Market Adoption and Real-World Deployments

Despite Nvidia’s longstanding leadership in AI silicon, AMD reports accelerating traction among top industry players. Its Instinct accelerators power AI research and deployment for tech giants including Meta, OpenAI, Microsoft, and xAI. Meta, for example, uses MI300X GPUs for Llama 3 and Llama 4 inference, while Microsoft runs both proprietary and open-source models on MI300X in production on Azure.

Pushing Sustainability in AI Computing

Energy efficiency is a major focus for AMD’s latest hardware. According to the company, the MI350 Series has surpassed its five-year goal of a 30x improvement in the energy efficiency of AI training and high-performance computing, reaching 38x. Looking ahead, AMD aims for a 20x increase in rack-scale energy efficiency by 2030, which it predicts could cut the electricity used to train a typical AI model by 95%.
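The link between an efficiency multiple and an electricity saving can be checked with a few lines of arithmetic. The sketch below assumes that "energy efficiency" means work done per unit of energy and that the workload is held fixed. The function name is hypothetical, and the multiples are AMD's stated figures, not independent measurements.

```python
def energy_saving(efficiency_gain: float) -> float:
    """Fraction of energy saved on a fixed workload when efficiency
    (work per unit of energy) improves by the given multiple."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # The same work at N times the efficiency needs 1/N of the energy.
    return 1.0 - 1.0 / efficiency_gain


# Multiples quoted by AMD (assumed inputs).
for label, gain in [("30x target", 30), ("38x reported", 38), ("20x by 2030", 20)]:
    print(f"{label}: {energy_saving(gain):.1%} less energy for the same work")
```

On this reading, a 20x efficiency gain corresponds to using one twentieth of the energy, which matches the 95% reduction AMD predicts.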

Looking Ahead: The Future With MI400 and Open Innovation

AMD’s roadmap sets ambitious targets. The upcoming Instinct MI400 GPUs are projected to provide up to 10x better performance for advanced inference tasks, such as running Mixture of Experts (MoE) models. Nvidia remains far larger by market capitalization (AMD’s own stood at $192.14 billion at the time of the announcement), but AMD’s rapid technological progress signals intensifying competition.

Dr. Lisa Su summarized AMD’s vision: "AMD is accelerating AI innovation with our Instinct MI350 series, groundbreaking Helios rack-scale solutions, and expanding support for our ROCm open software stack. We believe an open, collaborative AI ecosystem is crucial for shaping the next era of artificial intelligence."

Source: techradar
