Meta rolls out AI-powered voice dubbing worldwide

Meta has begun a global rollout of its AI-driven voice dubbing feature for Reels. First teased by Mark Zuckerberg at Meta Connect 2024, the tool uses generative AI to translate a creator's spoken audio into another language and can optionally sync the creator's lip movements to the translated track.

Key product features

Translation and voice cloning

The feature uses the sound and tone of your original voice to create a translated audio track that preserves your delivery and cadence. At launch, translation is available only between English and Spanish (in both directions), and Meta says more languages will follow in future updates.

Lip‑syncing and preview controls

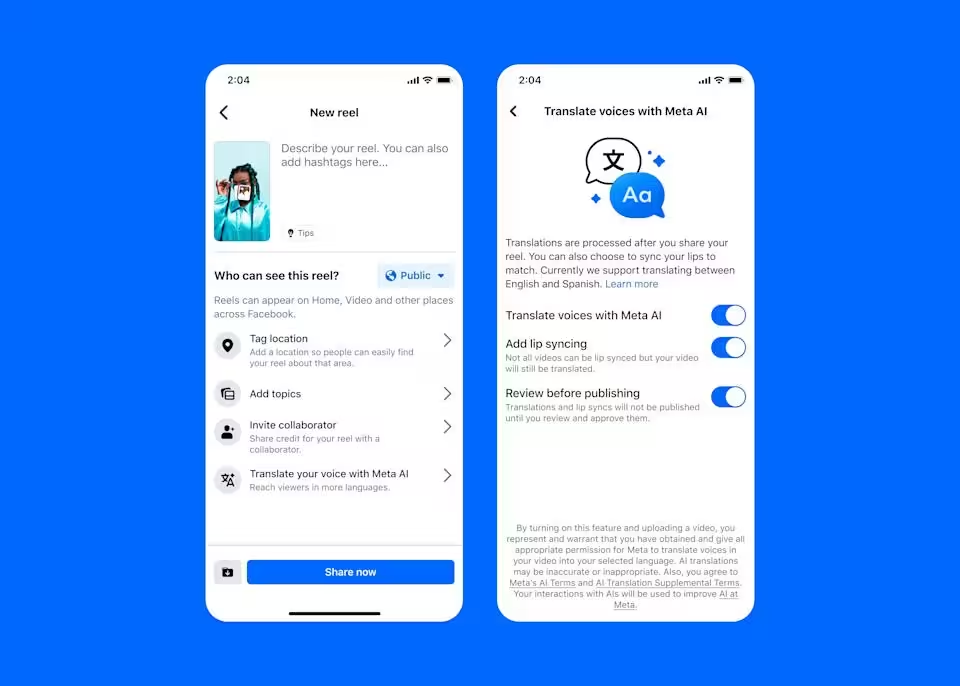

Creators can toggle lip-syncing on or off and preview the AI-generated translation before publishing. (Image: Meta)

Access and creator eligibility

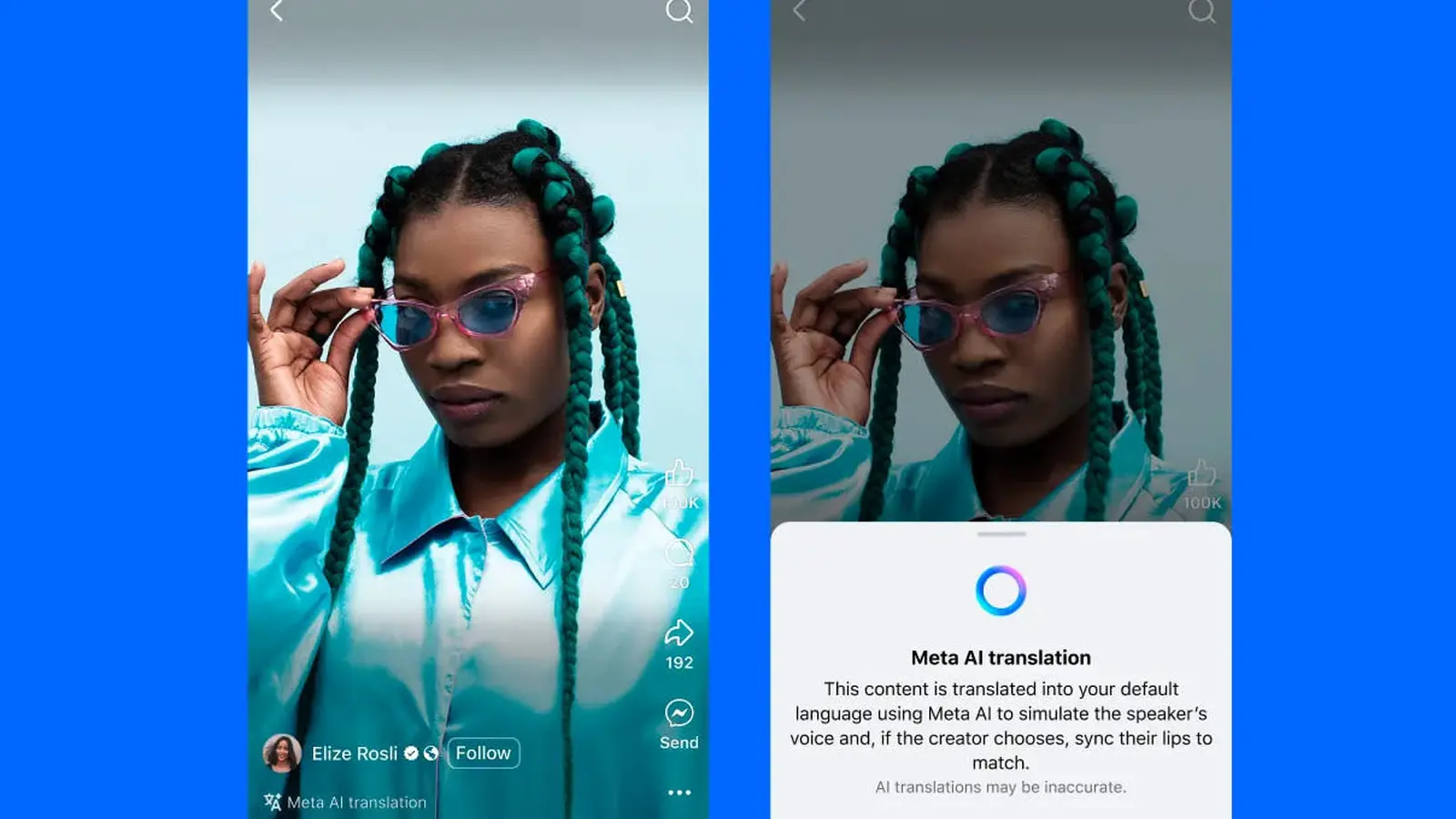

Initially, the tool is available on Facebook only to creators with at least 1,000 followers, while on Instagram any user with a public account can use it. To apply the feature, select the "Translate your voice with Meta AI" option when publishing a Reel; the same menu exposes the lip-sync toggle and the preview option. Viewers will see a pop-up labeling the clip as translated with Meta AI.

Best practices and technical guidance

Meta recommends face-to-camera videos for optimal results. Avoid covering your mouth and keep background music to a minimum. The system supports up to two speakers but works best when they don't talk over each other. Following these guidelines helps the model keep both the translated audio and the lip synchronization accurate and natural.

Why this matters: advantages and market relevance

For creators and brands, automated voice translation and lip-syncing lower the barrier to reaching multilingual audiences, making content easier to localize and discover. Meta has also added a by-language view to its creator analytics so creators can see how each translated version performs, useful data for planning global growth and content strategy.

Comparisons and competitive landscape

Meta's move follows similar launches by other major platforms: YouTube introduced automatic voice dubbing last year, and Apple brought Live Translation to Messages, Phone and FaceTime with iOS 26. Meta's differentiator is its combination of voice cloning, generative AI translation and optional lip-syncing, aimed specifically at short-form Reels content.

Use cases

- Influencers expanding reach into Spanish/English markets.

- Brands localizing campaign videos quickly without reshooting.

- Educators and micro‑publishers repurposing short lessons for new audiences.

Outlook

As Meta adds more languages and refines speaker support, the feature could become a standard tool for global content localization and social video SEO. For now, creators should test with clear audio and face‑forward shots to get the best results.

Source: Engadget