Samsung reportedly wins a major HBM3E order from Nvidia

A Korean report says Samsung is set to deliver 10,000 HBM3E 12-layer memory stacks to Nvidia for use in its AI servers and accelerators. The shipment, if confirmed, would be a notable milestone for Samsung's memory division as it pushes deeper into the high-bandwidth memory market that feeds modern AI workloads.

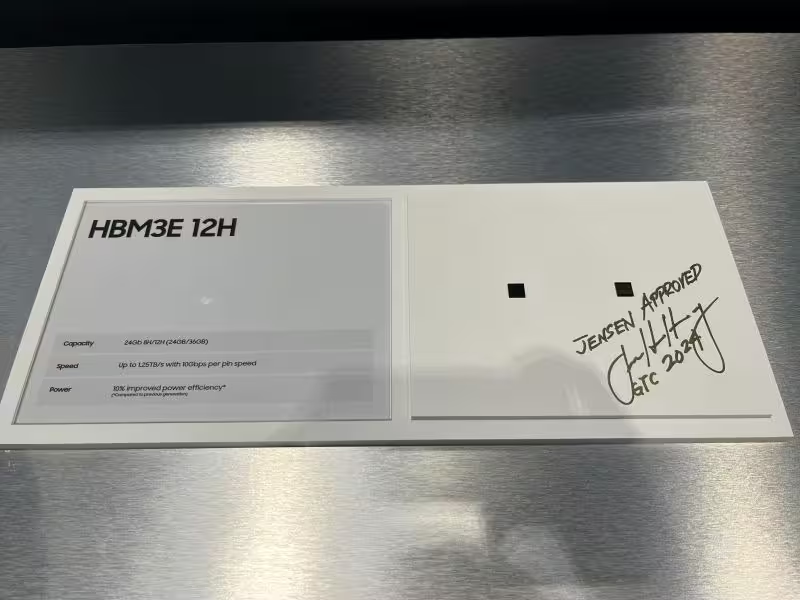

What the chips likely are

Industry observers expect these to be Samsung's HBM3E-12H modules, the company's flagship 36GB stacks unveiled last year. Samsung advertises peak bandwidth of around 1,280 GB/s per stack, which makes the modules well suited to large-scale AI training and inference, where memory bandwidth is often the bottleneck.
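As a rough back-of-the-envelope check on that bandwidth figure, the sketch below derives the per-pin data rate it implies and the time needed to read one full stack at peak rate. The 1024-bit interface width is an assumption based on the general HBM design, not something stated in the report, and the function names are purely illustrative.

```python
# Figures quoted in the article.
PEAK_BANDWIDTH_GB_S = 1280   # peak bandwidth per stack, in GB/s
STACK_CAPACITY_GB = 36       # capacity per HBM3E-12H stack, in GB

# Assumption (not from the article): each HBM stack exposes a 1024-bit data interface.
INTERFACE_WIDTH_BITS = 1024


def per_pin_rate_gbps(bandwidth_gb_s: float, width_bits: int) -> float:
    """Per-pin data rate in Gbit/s implied by a per-stack bandwidth in GB/s."""
    return bandwidth_gb_s * 8 / width_bits


def seconds_to_read_stack(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Time in seconds to stream the whole stack once at peak bandwidth."""
    return capacity_gb / bandwidth_gb_s


pin_rate = per_pin_rate_gbps(PEAK_BANDWIDTH_GB_S, INTERFACE_WIDTH_BITS)
read_time = seconds_to_read_stack(STACK_CAPACITY_GB, PEAK_BANDWIDTH_GB_S)

print(f"Implied per-pin rate: {pin_rate} Gbit/s")
print(f"Time to read one full stack: {read_time * 1000:.1f} ms")
```

Under that assumption, 1,280 GB/s works out to 10 Gbit/s per pin, and reading a full 36GB stack once takes roughly 28 milliseconds at peak rate.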

Key facts at a glance

- Source: report from AlphaBiz in Korea

- Quantity: 10,000 HBM3E 12-layer modules

- Likely product: HBM3E-12H, 36GB per stack

- Primary customer: Nvidia, for AI accelerators and server deployments
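To put the reported quantity in perspective, the sketch below totals the memory capacity of the order and estimates how many accelerators it could equip. The `STACKS_PER_ACCELERATOR` value is a hypothetical placeholder, since the report does not say which Nvidia products would use the memory.

```python
# Figures from the report.
ORDER_UNITS = 10_000        # HBM3E 12-layer stacks in the reported order
STACK_CAPACITY_GB = 36      # capacity per stack, in GB

# Hypothetical value for illustration only; the report does not name a product.
STACKS_PER_ACCELERATOR = 8


def total_capacity_tb(units: int, capacity_gb: int) -> float:
    """Total capacity of the order in decimal terabytes (1 TB = 1,000 GB)."""
    return units * capacity_gb / 1000


def accelerators_covered(units: int, stacks_per_accelerator: int) -> int:
    """Number of accelerators that can be fully populated with the order."""
    return units // stacks_per_accelerator


print(f"Total capacity: {total_capacity_tb(ORDER_UNITS, STACK_CAPACITY_GB)} TB")
print(f"Accelerators covered: {accelerators_covered(ORDER_UNITS, STACKS_PER_ACCELERATOR)}")
```

With eight stacks per accelerator, used here purely as an illustration, the order would amount to 360 TB of memory and cover 1,250 accelerators.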

Why this matters

AI accelerators from Nvidia and other vendors rely heavily on high-bandwidth memory to move large model weights and activations quickly between compute and memory. Only three manufacturers currently produce HBM3E at volume (Samsung, SK Hynix, and Micron), so any new sourcing agreement can shift supply dynamics. Until recently, Samsung struggled to meet Nvidia's quality criteria, and Nvidia sourced most of its HBM from other suppliers. Reports indicate that Samsung may have resolved those issues and secured Nvidia's approval, clearing the way for commercial orders.

Market and technical context

Large tech firms and cloud providers continue to expand AI server farms, driving sustained demand for HBM3E. The memory's combination of capacity and bandwidth helps accelerate workloads like large language model training and real-time inference. A supply agreement of this size could:

- Strengthen Samsung's position in the HBM market

- Provide Nvidia additional supplier diversity for critical memory

- Potentially affect pricing and availability across the ecosystem

Outlook and implications

If the AlphaBiz figures are accurate, the 10,000-unit order would be an initial but meaningful volume rather than a full production commitment. It could be followed by larger orders as Nvidia ramps new accelerator lines or expands deployments in hyperscale datacenters. For Samsung, winning Nvidia as a buyer would represent not only revenue but also validation that its HBM3E technology meets stringent performance and reliability standards.

Ultimately, the development underscores how memory supply chains are becoming strategic assets in an AI-driven compute environment. Stakeholders from chipmakers to cloud operators will watch subsequent confirmations, shipments, and any official comments from Samsung or Nvidia to understand the broader impact.

Source: SamMobile
