3 Minutes

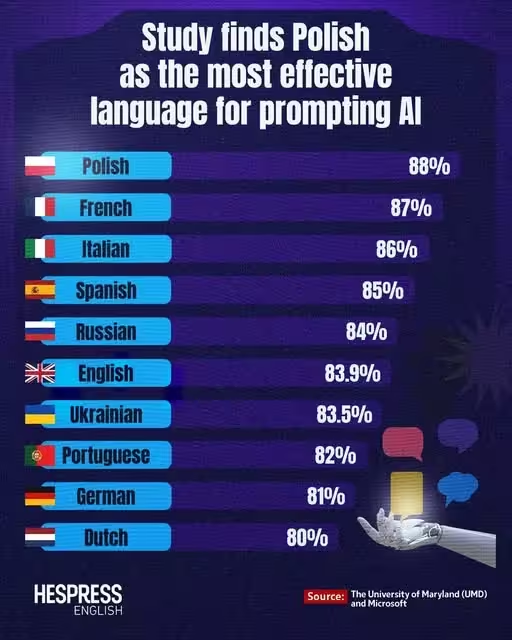

A surprise finding from a joint study by the University of Maryland and Microsoft: Polish outperformed 25 other languages as the most effective language for prompting large AI models, while English ranked only sixth.

How researchers tested language performance with AI

The research team fed identical prompts translated into 26 languages to multiple large language models — including OpenAI models, Google Gemini, Qwen, Llama, and DeepSeek — and measured task accuracy. Against expectations, Polish came out on top with an average task accuracy of 88%.

Authors of the report called the results “unexpected” and noted that English was not the universal winner. In longer-text evaluations English placed sixth, while Polish led the pack. The study highlights how language choice can materially affect model output quality.

Top languages for AI prompting — the study's leaderboard

Here are the ten best-performing languages from the study, ranked by average accuracy:

- Polish — 88%

- French — 87%

- Italian — 86%

- Spanish — 85%

- Russian — 84%

- English — 83.9%

- Ukrainian — 83.5%

- Portuguese — 82%

- German — 81%

- Dutch — 80%

Why might Polish be better for AI prompts?

Several theories could explain this counterintuitive outcome. Polish is morphologically rich and has relatively consistent spelling rules, which might yield tokens that align well with transformer tokenization schemes. That can make prompts clearer to a model even if fewer Polish training examples exist.

Another factor is ambiguity and phrasing: some languages naturally force more explicit grammatical signals, reducing the chance that a model misinterprets intent. The study also suggests that a language being “hard for humans” doesn’t mean it’s difficult for AI — models can pick up structural patterns regardless of speaker learning difficulty.

On the flip side, Chinese ranked near the bottom (fourth from last) in this evaluation, showing that large training data alone doesn't guarantee superior prompt performance across languages.

Implications for prompt engineering and multilingual AI

So what should developers, researchers, and prompt engineers take away?

- Don’t assume English is always best: test prompts in multiple languages — you might get more accurate or concise outputs in an unexpected tongue.

- Consider morphology and tokenization effects when designing multilingual benchmarks or fine-tuning datasets.

- For international deployments, evaluate model behavior in target languages rather than extrapolating from English-only tests.

The Polish Patent Office even posted on social media that the results show Polish is the most precise language for instructing AI, adding a wry note: humans may find Polish hard to learn, but AI does not share that limitation.

What’s next?

Researchers say this isn’t the final word — more work is needed to understand how tokenization, training data distribution, and linguistic structure influence model behavior. Still, the study nudges the AI community to rethink assumptions and to experiment broadly when optimizing prompts for multilingual models.

Leave a Comment