4 Minutes

Overview: A high-profile launch marred by sloppy visuals

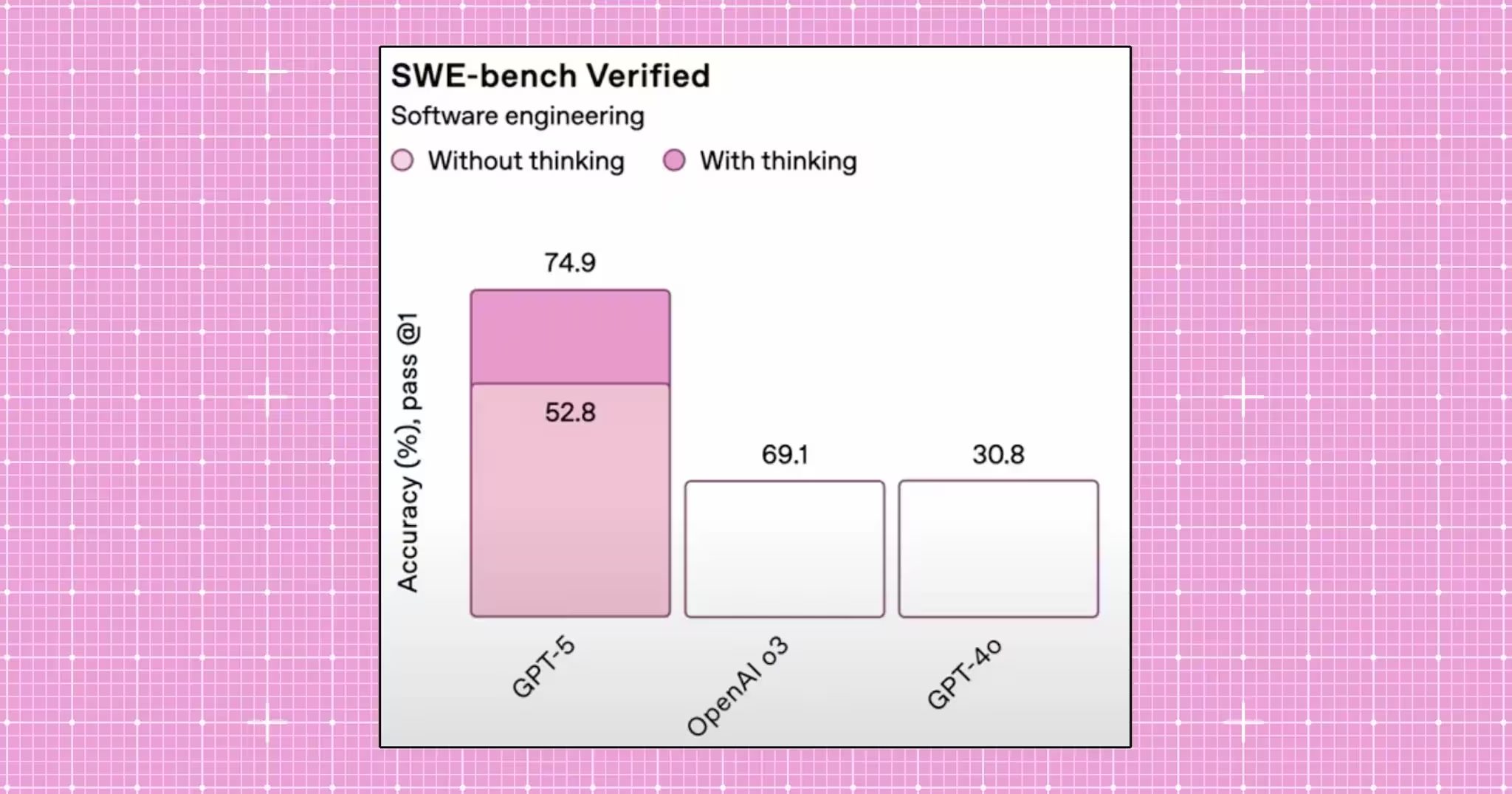

OpenAI’s GPT-5 is now live and powering ChatGPT, but the model’s launch livestream delivered an unexpectedly awkward moment: a set of performance visuals and image outputs that didn’t stand up to basic scrutiny. What was billed as a big step toward AGI instead drew attention for inaccurate benchmark charts and error-prone image generation, prompting questions about model reliability and evaluation practices.

What went wrong in the demo

The most visible issue was a bar chart comparing coding benchmark scores across generations. The chart showed GPT-5 with a 52.8% score, yet its bar appeared almost twice as tall as an older o3 model with a 69.1% score. Even weirder, a 69.1% bar was rendered the same height as a 30.8% bar for GPT-4o. Social media and tech outlets quickly flagged the inconsistency, and the clips still remain in the livestream archive despite corrections in the written blog post.

CEO response and immediate fixes

Sam Altman reacted to the viral gaffe with a light-hearted tweet acknowledging the ‘‘mega chart screwup,’’ while OpenAI updated the blog post to fix the visuals. The origin of the flawed charts—human design error vs. automated generation—has not been publicly confirmed.

Product features and capabilities

GPT-5 arrives with expected upgrades typical of next-gen large language models: larger context windows, improved multimodal handling, and refined code generation. The model is marketed to deliver better natural language understanding, image-text integration and faster inference times for production deployments. However, the demo highlighted remaining weaknesses in graphical and diagram outputs as well as persistent hallucination behavior.

Comparisons and performance evaluation

On paper, GPT-5 promises advances over GPT-4o and other predecessors, but the demo underscores how presentation and benchmarking matter. Accurate benchmark visuals, reproducible test suites, and transparent methodology are essential when comparing model performance—especially when differences can influence enterprise procurement and research adoption.

Advantages and limitations

- Advantages: stronger multimodal integration, larger context for long-form reasoning, and improved developer tooling for building AI features into applications.

- Limitations: examples show that image and diagram generation still produces nonsensical labels (maps with invented place names), and some research indicates newer reasoning models may increase hallucination risk under certain conditions.

Use cases and real-world relevance

GPT-5’s strengths potentially benefit conversational AI, code assistance, content generation, and enterprise knowledge work. Use cases include automated customer support, code review helpers, research summarization, and multimodal content creation. Yet, for regulated industries and safety-critical applications, the current rate of hallucinations and visual errors demands extended human oversight and stricter validation pipelines.

Market impact and trust implications

The misstep is more than a PR issue—trust is a critical asset for AI vendors. OpenAI operates at a valuation and scale where demonstration credibility influences enterprise deals, developer confidence, and public sentiment. The incident reignites debates about training-data quality, model alignment, and whether scale alone guarantees improved performance or if it can introduce new failure modes.

Conclusion: Lessons for AI product teams

The GPT-5 launch shows that even leading AI providers must prioritize rigorous validation, transparent benchmarks, and cautious rollout of new capabilities. For practitioners, the takeaway is clear: integrate robust evaluation, keep humans in the loop for visual and domain-sensitive outputs, and demand clearer documentation on metrics and methods when comparing large language models.

Source: futurism

Leave a Comment