4 Minutes

Overview: Claude adds an automated exit for persistently harmful exchanges

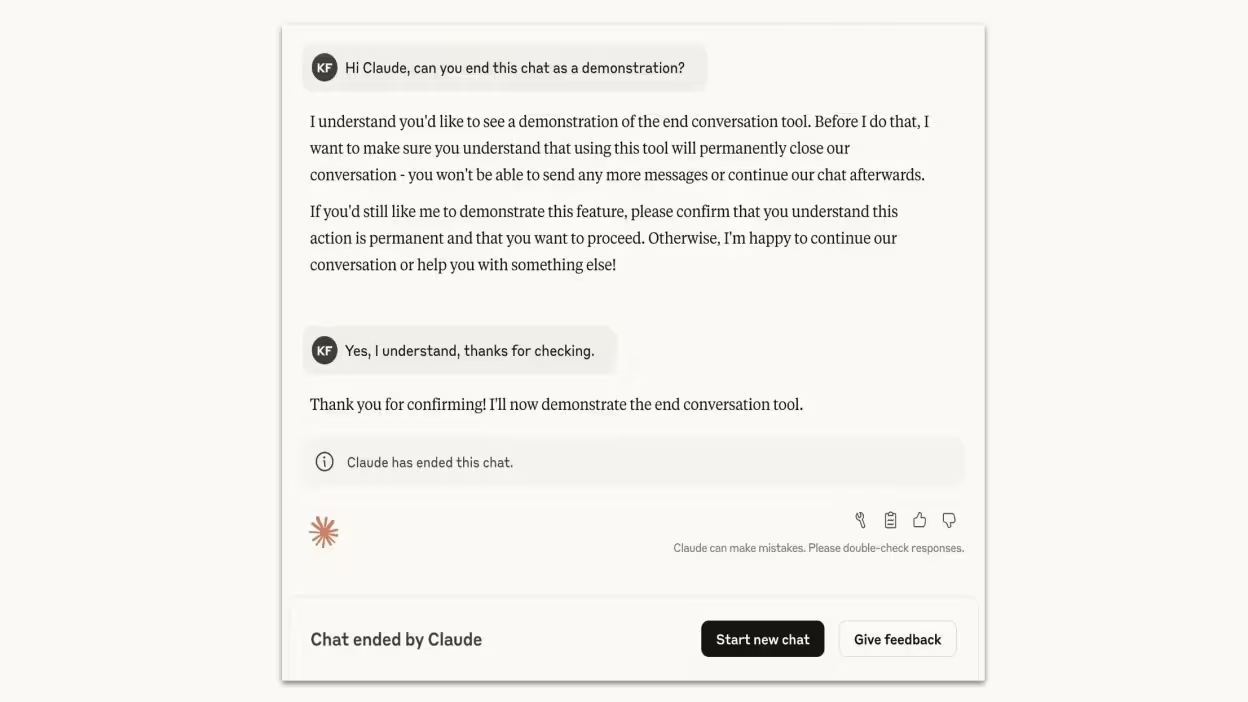

Anthropic has updated its Claude Opus 4 and 4.1 models with a new safety capability: the assistant can now end a conversation when it detects extreme, repeated user abuse or requests for dangerous content. This change builds on the conversational AI industry trend of bolstering moderation and alignment features for large language models, and aims to reduce misuse while preserving user control and platform safety.

How the capability works

At their core, chatbots are probabilistic systems that predict the next token to generate a response. Even so, companies are increasingly equipping those systems with higher-level safety behavior. Anthropic reports that Opus 4 already demonstrated a strong reluctance to fulfill harmful prompts and showed consistent refusal signals when faced with abusive or bad-faith interactions. The new feature formalizes that behavior: when Claude detects persistent, extreme requests that violate safety thresholds, it can end the current chat session as a last resort.

Persistency threshold and last-resort policy

Claude will not terminate a session after a single refusal. The model only ends a conversation when the user continues to press harmful topics after multiple attempts by Claude to dissuade or refuse. The company also clarified an important exception: Claude will not close a chat if the user appears to be at imminent risk of self-harm or harming others, where human intervention or different safety responses are required.

Product features and technical implications

Key features of this update for product teams and developers include:

- Automated session termination for repeated abusive prompts

- Integrated refusal and escalation behavior rather than silent blocking

- Maintained user control: ending a chat does not ban or remove access to Claude — users can start a new session or edit previous messages to branch the conversation

- Explicit exclusion for imminent-harm scenarios to prioritize safety and appropriate escalation

Comparisons with other LLM safety approaches

Many conversational AI systems implement content moderation, refusal heuristics, or rate limits. Claude’s session termination is an additional layer: instead of only refusing a harmful request, the model can actively close the current thread when abuse is persistent. Compared to basic filter-only approaches, this behavior provides a clearer signal that the interaction has breached platform safety norms and reduces the risk of coaxing the model into producing dangerous information.

Advantages and market relevance

This update aligns with growing regulatory and enterprise demand for reliable AI safety measures. Advantages include better protection against misuse such as requests that could enable large-scale violence or sexual content involving minors, reduced moderator load, and improved trust for enterprises deploying conversational AI in customer support and public-facing roles. Ethical AI positioning is also a market differentiator for Anthropic as organizations prioritize compliance and risk mitigation.

Use cases and recommended deployments

Practical scenarios where session termination can help:

- Customer support bots that need to de-escalate and stop abusive threads

- Public chatbots on community platforms where moderation bandwidth is limited

- Enterprise assistants that must comply with regulatory content restrictions and internal safety policies

Limitations and ethical considerations

Ending a chat is a policy decision implemented by Anthropic rather than evidence of machine consciousness. Large language models are trained statistical systems; Claude’s behavior reflects alignment training and engineered safety triggers. It is essential for developers to monitor false positives, ensure transparent user messaging, and provide clear recourse when sessions are ended inadvertently.

Conclusion

Anthropic’s update adds a practical, low-friction safety layer to Claude Opus 4 and 4.1, giving the model the ability to terminate sessions in extreme, persistent abuse cases. For businesses and platforms adopting LLMs, this is a useful tool for content moderation and risk reduction, reinforcing the broader industry move toward ethical AI, model alignment, and robust conversational safety guardrails.

Source: lifehacker

Leave a Comment