
Huawei reportedly developing an AI-optimized SSD to challenge HBM

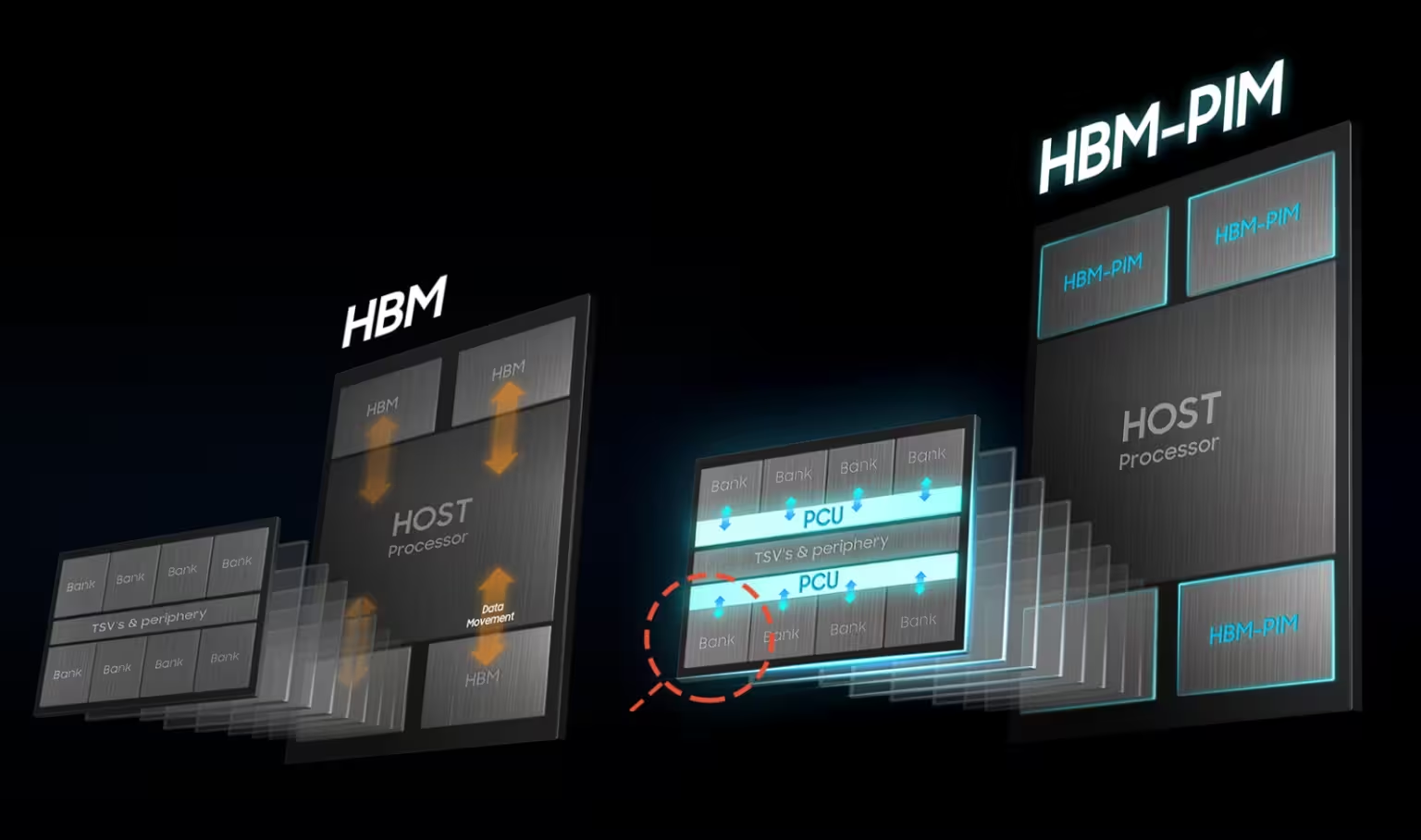

Chinese media reports claim Huawei is designing a dedicated "AI memory" product: a solid-state drive (SSD) tuned specifically for datacenter AI workloads. The alleged AI SSD is said to approach the throughput and efficiency of high-bandwidth memory (HBM) while sidestepping the capacity limits that constrain HBM. That could make it a practical alternative to HBM and reduce Huawei's reliance on Western supply chains.

Product features: what the AI SSD is expected to offer

Scalable capacity without strict limits

According to the reports, the AI SSD aims to eliminate the tight capacity ceilings associated with HBM stacks, enabling much larger effective memory pools for large language model (LLM) training and inference.
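To see why capacity ceilings matter for LLMs, the arithmetic below estimates the memory a model needs for its weights and its key/value (KV) cache. This is a back-of-the-envelope sketch, not anything from the reports: the 70B parameter count, layer and head dimensions, context length and batch size are hypothetical values chosen for illustration, and 2 bytes per value assumes FP16/BF16 storage.

```python
# Back-of-the-envelope LLM memory footprint (all model dimensions are hypothetical).

def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Bytes to hold the model weights; 2 bytes/param assumes FP16/BF16."""
    return num_params * bytes_per_param


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int = 2) -> int:
    """Bytes for the KV cache: one K and one V tensor per layer, per token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch * bytes_per_elem


GB = 10**9

if __name__ == "__main__":
    # Hypothetical 70B-parameter model serving 8 sequences of 32K tokens.
    w = weight_bytes(70 * 10**9)
    kv = kv_cache_bytes(num_layers=80, num_kv_heads=8, head_dim=128,
                        seq_len=32_768, batch=8)
    print(f"weights: {w / GB:.0f} GB, KV cache: {kv / GB:.1f} GB")
    # → weights: 140 GB, KV cache: 85.9 GB
```

Both terms grow with model size, and the KV cache also grows linearly with context length and batch size, which is why a larger, cheaper memory pool is attractive even if it is slower than HBM.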

Datacenter tuning and efficiency

The drive is reportedly optimized for AI-specific access patterns, prioritizing low latency, high throughput and endurance suited to heavy training loads. Those attributes would set it apart from a standard NVMe SSD.

Software side: UCM (Unified Cache Manager) and memory orchestration

Alongside the hardware work, Huawei has introduced a Unified Cache Manager (UCM) software suite that pools HBM, DRAM and SSD resources to accelerate LLM workloads. UCM is described as transparently tiering and caching AI data across these memory types, expanding usable memory even when high-bandwidth modules are in short supply.
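The reports do not describe how UCM works internally. As a rough illustration of what "tiering across HBM, DRAM and SSD" means, here is a minimal Python sketch of a three-tier LRU cache. The class names, the item-count capacities and the promote-on-access / demote-on-eviction policy are all assumptions made for illustration; this is not Huawei's UCM API or algorithm.

```python
from collections import OrderedDict
from typing import Any, List, Optional


class Tier:
    """One memory tier with a fixed capacity (counted in items) and LRU order."""

    def __init__(self, name: str, capacity: Optional[int]) -> None:
        self.name = name
        self.capacity = capacity  # None = treated as unbounded (the SSD tier here)
        self.items: "OrderedDict[str, Any]" = OrderedDict()  # oldest entry first

    def is_full(self) -> bool:
        return self.capacity is not None and len(self.items) >= self.capacity


class TieredCache:
    """Hypothetical HBM -> DRAM -> SSD cache. New or recently used entries live
    in the fastest tier; when a tier fills, its least-recently-used entry is
    demoted one tier down."""

    def __init__(self, tiers: List[Tier]) -> None:
        if not tiers or tiers[-1].capacity is not None:
            raise ValueError("the slowest tier must be unbounded")
        self.tiers = tiers

    def _insert(self, level: int, key: str, value: Any) -> None:
        tier = self.tiers[level]
        if tier.is_full():
            # Make room by demoting the LRU entry to the next, slower tier.
            old_key, old_value = tier.items.popitem(last=False)
            self._insert(level + 1, old_key, old_value)
        tier.items[key] = value

    def _remove(self, key: str) -> None:
        for tier in self.tiers:
            tier.items.pop(key, None)

    def put(self, key: str, value: Any) -> None:
        self._remove(key)  # avoid keeping duplicate copies in different tiers
        self._insert(0, key, value)

    def get(self, key: str) -> Any:
        for tier in self.tiers:
            if key in tier.items:
                value = tier.items.pop(key)
                self._insert(0, key, value)  # promote hot data to the fastest tier
                return value
        raise KeyError(key)

    def where(self, key: str) -> str:
        """Name of the tier currently holding `key`."""
        for tier in self.tiers:
            if key in tier.items:
                return tier.name
        raise KeyError(key)


if __name__ == "__main__":
    cache = TieredCache([Tier("HBM", 2), Tier("DRAM", 2), Tier("SSD", None)])
    for i in range(5):
        cache.put(f"tensor{i}", i)
    print({k: cache.where(k) for k in ["tensor0", "tensor2", "tensor4"]})
    # → {'tensor0': 'SSD', 'tensor2': 'DRAM', 'tensor4': 'HBM'}
    cache.get("tensor0")  # accessing tensor0 promotes it back into HBM
    print(cache.where("tensor0"))
    # → HBM
```

LRU is used here only because it is the simplest policy that shows hot data migrating toward the fastest tier and cold data spilling to the SSD; a production system would size tiers in bytes, move data asynchronously and prefetch ahead of use.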

Comparisons: AI SSD vs HBM, DRAM and standard SSDs

HBM remains unmatched in bandwidth per watt and sits physically close to the GPU or accelerator, but it is expensive and geopolitically sensitive. Standard DRAM offers far lower latency than an SSD but is constrained by cost per gigabyte and by how far its capacity can scale. AI SSDs promise a middle ground: much higher capacity than HBM at lower cost, better bandwidth for AI access patterns than conventional SSDs, and integration into the memory hierarchy through software such as UCM.

Advantages, use cases and market relevance

Advantages include supply-chain resilience, greater capacity for LLMs, and potential cost savings for hyperscalers and cloud providers. Use cases span large-scale model training, distributed inference clusters, and datacenter memory pooling. Strategically, a viable AI SSD could help China's AI hardware ecosystem narrow the gap with leaders such as NVIDIA by offering an alternative memory hierarchy while export restrictions limit access to HBM.

Caveats and outlook

Details on the architecture, latency figures and real-world benchmarks remain undisclosed, and the claims that the drive removes capacity limits and matches HBM efficiency require independent validation. Still, Huawei's combined hardware and software approach, pairing the AI SSD with UCM, signals a pragmatic path for scaling AI memory amid geopolitical constraints.

Source: wccftech
