
Translating thought into speech

Researchers across several US institutions have developed an inner-speech decoder — a brain-computer interface (BCI) that translates imagined words into text or audible speech. In a small clinical test involving four volunteers with severe paralysis, the system reached a peak accuracy of 74 percent for converting internal speech into audible output. Published data (Kunz et al., Cell, 2025) and commentary from Stanford neuroscientists indicate this approach moves BCIs closer to decoding thought at its source rather than relying solely on signals generated during attempted physical speech.

Scientific background and technology

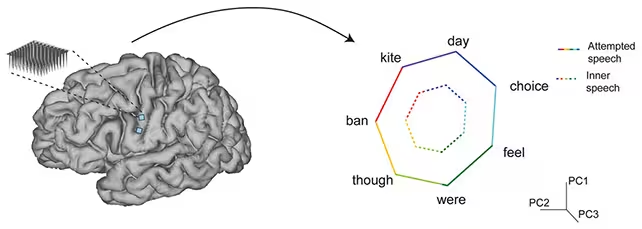

The decoder uses a neural implant to record electrical activity from the motor cortex, the brain area involved in planning and executing movements — including those required for speaking. Rather than waiting for motor commands to reach muscles, this implant detects neural patterns tied to phonemes, the basic sound units of language. Machine learning models are then trained to map those patterns to phonemes and assemble them into words and sentences.
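As a rough illustration of mapping neural patterns to phonemes, the sketch below trains a nearest-centroid classifier on made-up two-dimensional feature vectors. It is a deliberately simple stand-in, not the study's model: the feature values, the phoneme labels, and the function names `train_centroids` and `classify` are all assumptions for illustration.

```python
import math

def train_centroids(examples):
    """Average the feature vectors seen for each phoneme label.

    examples: list of (feature_vector, phoneme_label) pairs (toy data).
    Returns a dict mapping label -> mean feature vector.
    """
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(centroids, features):
    """Return the phoneme whose centroid is closest (Euclidean) to features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical training data: two recordings per phoneme.
training = [
    ([1.0, 0.1], "HH"),
    ([0.9, 0.2], "HH"),
    ([0.1, 1.0], "AY"),
    ([0.2, 0.8], "AY"),
]
centroids = train_centroids(training)

# Classify two new (made-up) recordings and join the phonemes in order.
frames = [[0.8, 0.3], [0.0, 0.9]]
phonemes = [classify(centroids, frame) for frame in frames]
print(phonemes)
```

A real decoder works with far higher-dimensional recordings and sequence models, but the principle is the same: learn what each phoneme's activity looks like, then label new activity by similarity.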

This shift from attempted-speech BCIs to inner-speech decoding addresses a key limitation for people with locked-in syndrome or severe motor impairment: they may be unable to make any muscular attempt at speaking, but can still form mental representations of words. As Stanford neuroscientist Benyamin Meschede-Krasa notes, 'If you just have to think about speech instead of actually trying to speak, it's potentially easier and faster for people.'

Experiment details and key results

In the reported study, four participants with profound paralysis imagined speaking specific words and phrases while implanted electrodes sampled motor cortex activity. Machine learning algorithms identified statistical relationships between neural patterns and speech units. Investigators found overlapping but distinguishable activity between attempted speech and pure inner speech; inner-speech signals appeared to be a 'smaller' version of attempted-speech patterns, according to Stanford neuroscientist Frank Willett.

Using probabilistic language models to weigh which phonemes and words typically co-occur, the system demonstrated the potential to recognize a vocabulary of up to 125,000 words from inner speech alone. Peak decoding performance reached 74 percent in some conditions, though average accuracy across trials was often lower. The study also trialed a privacy safeguard: users mentally invoked a specific 'password' to enable or disable decoding, achieving 98 percent reliability in the experimental task.
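To show how a language model can weigh which words typically co-occur, here is a minimal sketch that combines per-word scores from a hypothetical neural decoder with a toy bigram model and searches for the best overall sentence. The candidate words, probabilities, and the `rescore` function are invented for illustration and do not come from the study.

```python
import math

def rescore(candidates, bigram_logp, lm_weight=1.0, start="<s>", floor=-10.0):
    """Viterbi search over per-position word candidates.

    candidates: list of dicts mapping word -> neural log-likelihood
                (hypothetical decoder output, one dict per word position).
    bigram_logp: dict mapping (previous_word, word) -> log probability.
    floor: log probability assumed for word pairs missing from the model.
    """
    # best[word] = (total score, best word sequence ending in that word)
    best = {start: (0.0, [])}
    for position in candidates:
        new_best = {}
        for word, neural_lp in position.items():
            options = []
            for prev, (score, path) in best.items():
                lm_lp = bigram_logp.get((prev, word), floor)
                options.append((score + neural_lp + lm_weight * lm_lp, path + [word]))
            new_best[word] = max(options, key=lambda option: option[0])
        best = new_best
    return max(best.values(), key=lambda option: option[0])[1]

# Toy decoder output: the neural evidence alone slightly favors "wand".
candidates = [
    {"I": math.log(0.6), "eye": math.log(0.4)},
    {"wand": math.log(0.55), "want": math.log(0.45)},
    {"water": math.log(0.5), "waiter": math.log(0.5)},
]

# Toy bigram language model (made-up probabilities).
bigram_logp = {
    ("<s>", "I"): math.log(0.5),
    ("<s>", "eye"): math.log(0.01),
    ("I", "want"): math.log(0.3),
    ("I", "wand"): math.log(0.001),
    ("want", "water"): math.log(0.2),
    ("want", "waiter"): math.log(0.01),
}

decoded = rescore(candidates, bigram_logp)
print(" ".join(decoded))
```

In this toy case the language model outweighs the slightly stronger neural evidence for "wand", because "I want" is far more common than "I wand". Setting `lm_weight=0` removes that correction and leaves the decoder's raw preferences.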

Limitations, privacy and clinical prospects

Important challenges remain. The sample size was small (four volunteers), and performance varied by participant and recording quality. Accuracy fell short of real-time conversational fluency, and the technology currently requires invasive implants and personalized machine learning calibration. There are also ethical and privacy concerns: a device that decodes inner speech could unintentionally record private thoughts. Proposed safeguards include explicit mental start/stop signals, authentication phrases, and on-device controls to prevent continuous logging.
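The explicit start/stop safeguard can be pictured as a small gate that sits between the decoder and any output. The sketch below is an assumption-laden illustration rather than the study's implementation: the `DecoderGate` class, the unlock phrase, and the 0.9 confidence threshold are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DecoderGate:
    """Blocks decoded phrases unless the user has unlocked decoding."""
    unlock_phrase: str
    threshold: float = 0.9  # illustrative value, not taken from the study
    enabled: bool = False

    def process(self, phrase, confidence):
        """Return the phrase if decoding is enabled, otherwise None.

        The unlock phrase itself is never emitted. It toggles decoding
        only when detected with confidence at or above the threshold.
        """
        if phrase == self.unlock_phrase:
            if confidence >= self.threshold:
                self.enabled = not self.enabled
            return None
        return phrase if self.enabled else None

# Hypothetical stream of (decoded phrase, detector confidence) pairs.
stream = [
    ("private thought", 0.99),  # dropped: decoding is still locked
    ("open sesame", 0.95),      # unlocks decoding
    ("hello", 0.80),            # emitted
    ("open sesame", 0.50),      # too uncertain to toggle, still not emitted
    ("open sesame", 0.97),      # locks decoding again
    ("more private", 0.90),     # dropped
]

gate = DecoderGate(unlock_phrase="open sesame")
emitted = [out for out in (gate.process(p, c) for p, c in stream) if out is not None]
print(emitted)
```

Defaulting to the locked state means nothing is output or logged until the user deliberately opts in, which is the behavior the proposed safeguards aim for.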

Researchers are optimistic that advances in sensor arrays, broader cortical mapping, and improved decoding algorithms can raise accuracy and allow models to be personalized more quickly than in earlier BCI systems. Related studies earlier in the year demonstrated real-time, person-specific thought decoding, underscoring the accelerating progress in neural decoding and speech BCIs.

Expert Insight

Dr. Aisha Patel, a neuroengineer and clinical BCI researcher, comments: 'This work represents an important proof of principle. The combination of high-resolution neural recordings and language-aware machine learning is promising. But to move from laboratory demonstrations to everyday clinical use we need larger trials, robust privacy protections, and interfaces that can learn and adapt to each user's unique neural signature.'

Conclusion

The new inner-speech decoder marks a significant step toward BCIs that can restore natural communication for people with severe speech and motor impairments. While peak performance levels in early tests are encouraging, broader validation, improved implant technology, and rigorous privacy safeguards are essential before thought-to-speech systems can become safe, reliable clinical tools. Continued interdisciplinary work in neuroscience, machine learning, and ethics will shape how quickly and responsibly this capability reaches patients.

Source: sciencealert
