iOS 26 could get a major AI boost with the Model Context Protocol

Apple appears to be preparing native support for the Model Context Protocol (MCP), according to code discovered in the first iOS 26.1 developer beta. If implemented, MCP could let third-party AI tools interact more deeply with apps across iPhone, iPad and Mac, unlocking new in-app capabilities for assistants and models outside Apple's ecosystem.

What is MCP and why it matters

MCP is an open protocol that gives AI systems a standard way to request and interact with the contextual data they need, so developers do not have to build a bespoke integration for every data source. Introduced by Anthropic, MCP has since been adopted by major AI players including OpenAI and Google. Code in the iOS 26.1 developer beta suggests Apple is preparing to support the protocol as well.
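
To make the idea concrete, here is a minimal sketch of what a single MCP request can look like on the wire. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method a client uses to invoke a tool that a server exposes. The tool name `read_visible_page` and its `max_chars` argument are hypothetical, chosen only for illustration; nothing in the beta code is known to define them.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "read_visible_page" is a hypothetical tool name used for illustration only.
message = make_tool_call(1, "read_visible_page", {"max_chars": 2000})
print(message)
```

Because every data source answers the same kind of message, an assistant needs one client implementation rather than one per integration.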

App Intents, Siri, and MCP

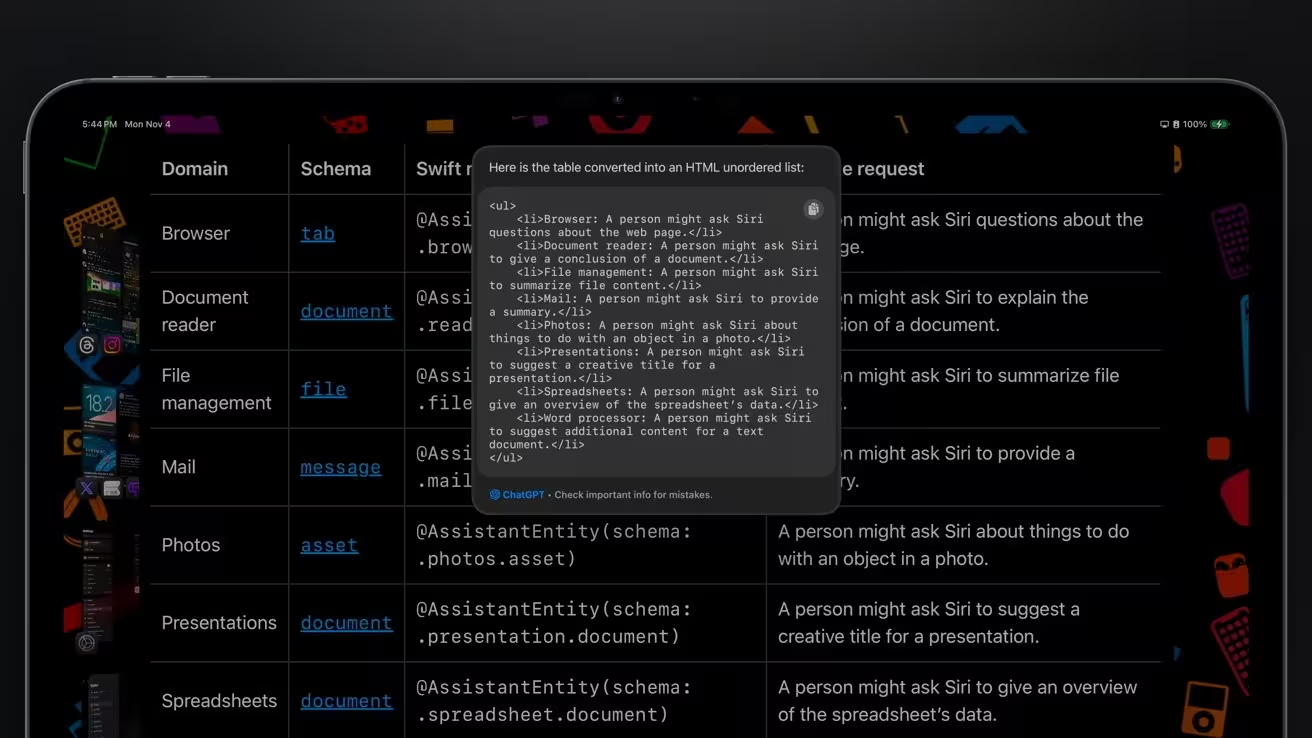

Apple’s App Intents framework already lets Siri and the Shortcuts app trigger actions inside apps. Recent improvements to App Intents, which are tied to Apple Intelligence, allow developers to surface onscreen content to Siri for parsing. In practice, that means whatever is currently displayed in an app can be passed to an AI model for analysis.

With MCP support, third-party AI services could use the same pathways to interact with applications. Siri could one day answer detailed questions about a webpage displayed on-screen, edit and share photos at the user's command, annotate a social post, or add items to an online cart without manual interaction. Likewise, users might instruct a third-party model such as OpenAI’s ChatGPT to perform those in-app actions across iPhone, iPad and Mac.
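
As a rough illustration of that pathway, the sketch below routes an incoming `tools/call` request to a registered app action and wraps the outcome in an MCP-style result. It is not Apple's implementation or the App Intents API: the `ACTIONS` registry, the `action` decorator, and the `add_to_cart` handler are all hypothetical stand-ins, and the result shape is a simplified version of what MCP servers return.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry of app actions; names and handlers are illustrative only.
ACTIONS: Dict[str, Callable[..., Any]] = {}


def action(name: str):
    """Register a function as a callable app action under the given tool name."""
    def register(fn):
        ACTIONS[name] = fn
        return fn
    return register


@action("add_to_cart")
def add_to_cart(item: str, quantity: int = 1) -> dict:
    # A real app would update its cart here; this stub just echoes the input.
    return {"item": item, "quantity": quantity, "status": "added"}


def _error(request_id: Any, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Route a JSON-RPC 'tools/call' request to the matching registered action."""
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _error(request_id, -32601, "Method not found")
    params = request.get("params", {})
    handler = ACTIONS.get(params.get("name"))
    if handler is None:
        return _error(request_id, -32602, "Unknown tool")
    try:
        output = handler(**params.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments for the handler
        return _error(request_id, -32602, f"Invalid arguments: {exc}")
    result = {"content": [{"type": "text", "text": json.dumps(output)}],
              "isError": False}
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


response = handle_request({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "add_to_cart",
               "arguments": {"item": "tea", "quantity": 2}},
})
print(json.dumps(response, indent=2))
```

The point of the design is that the assistant never needs app-specific code: it only needs to know a tool's name and arguments, whether the caller is Siri or a third-party model.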

MCP may also enable assistants to gather more comprehensive information from the web. Recent reports indicate Apple plans to let its foundation models handle on-device personal-data tasks while calling out to external models for web retrieval and summarization. MCP would standardize how contextual data is shared with those models.

Apple's plans for a revamped Siri

Rumors from September 2025 suggest Apple intends to introduce a web-search feature powered by Apple Foundation Models that can optionally enlist Google Gemini to enhance web-sourced summaries. The redesigned Siri is said to include three core components: a planner, a search operator, and a summarizer. Apple’s own model would act as the planner and search operator to safely handle personal on-device data, while an external model could assist with web retrieval and summarization.
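
The rumored three-part design can be pictured as a simple pipeline in which the summarizer is swappable. The sketch below is purely illustrative: the function names are hypothetical, the search step returns canned snippets instead of querying the web, and nothing here reflects how Apple's components actually work.

```python
from typing import Callable, List


def plan(question: str) -> List[str]:
    """Planner stand-in: turn a question into search queries (here, just one)."""
    return [question.strip().rstrip("?")]


def search(queries: List[str]) -> List[str]:
    """Search-operator stand-in: returns canned snippets, no network access."""
    return [f"snippet for '{query}'" for query in queries]


def local_summarize(snippets: List[str]) -> str:
    """Default summarizer stand-in: joins snippets into one string."""
    return " | ".join(snippets)


def answer(question: str,
           summarizer: Callable[[List[str]], str] = local_summarize) -> str:
    """Run planner, search operator, then whichever summarizer is supplied."""
    return summarizer(search(plan(question)))


# An external model would be passed in as the summarizer; a lambda stands in here.
print(answer("What is MCP?"))
print(answer("What is MCP?", summarizer=lambda snippets: snippets[0].upper()))
```

Keeping the planner and search steps fixed while passing the summarizer in as a parameter mirrors the reported split: personal data stays with the local steps, and only the retrieved snippets reach the external model.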

If MCP becomes part of iOS, Google Gemini or other external models could potentially do more than return search results — they might carry out in-app operations across third-party apps. Apple previously promised advanced in-app capabilities for Siri, but that upgrade has been delayed; enabling third-party AI to perform similar tasks could serve as an interim solution and give users more choice.

At the same time, MCP could let Siri independently pull and use web information without relying solely on external providers. Because MCP standardizes access to contextual data across sources, both Apple’s models and third-party assistants could retrieve and act on relevant information more easily.

Today, Siri can perform a privacy-preserving web search when needed, and more complex tasks are handed off to services like ChatGPT with user consent. Apple’s apparent strategy is to collaborate with AI companies rather than build a single closed solution — an approach that may accelerate development while offering users multiple AI options.

The revamped Siri is expected to arrive in early 2026, likely as part of an iOS 26.x update.

Source: AppleInsider
