
OpenAI has launched GPT-5-Codex-Mini, a compact, budget-friendly variant of its GPT-5-powered Codex coding model designed to give developers more affordable access to advanced code generation and software engineering assistance.

What the new mini model offers

GPT-5-Codex-Mini is a smaller, cost-optimized sibling of GPT-5-Codex. It aims to deliver most of the core coding capabilities, such as generating new projects, adding features and tests, and performing large-scale refactors, while reducing compute costs so teams can run far more tasks on the same budget. OpenAI says the Mini allows roughly four times the usage of the full GPT-5-Codex in exchange for a modest dip in accuracy and reasoning.
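
To make the "roughly four times the usage" claim concrete, here is a minimal back-of-the-envelope sketch. It treats the figure as an exact 4x multiplier, which is a simplification, and the function name, the `mini_share` parameter, and the capacity of 100 tasks are all hypothetical values chosen for illustration rather than anything OpenAI has published.

```python
# Assumption: "roughly four times the usage" is modeled as an exact 4x multiplier.
MINI_USAGE_MULTIPLIER = 4.0


def estimated_tasks(full_model_capacity: int, mini_share: float) -> float:
    """Estimate total tasks when part of a quota is spent on the Mini.

    full_model_capacity: tasks the quota would cover on the full model alone
        (a hypothetical number, not an OpenAI figure).
    mini_share: fraction of the quota (0.0 to 1.0) routed to the Mini.
    """
    if not 0.0 <= mini_share <= 1.0:
        raise ValueError("mini_share must be between 0 and 1")
    full_tasks = full_model_capacity * (1.0 - mini_share)
    mini_tasks = full_model_capacity * mini_share * MINI_USAGE_MULTIPLIER
    return full_tasks + mini_tasks


if __name__ == "__main__":
    # Hypothetical quota that covers 100 tasks on the full model.
    for share in (0.0, 0.5, 1.0):
        print(f"{share:.0%} of quota on Mini -> ~{estimated_tasks(100, share):.0f} tasks")
```

Under these assumptions, moving half of the quota to the Mini would raise the estimate from 100 tasks to about 250, and moving all of it would give about 400.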

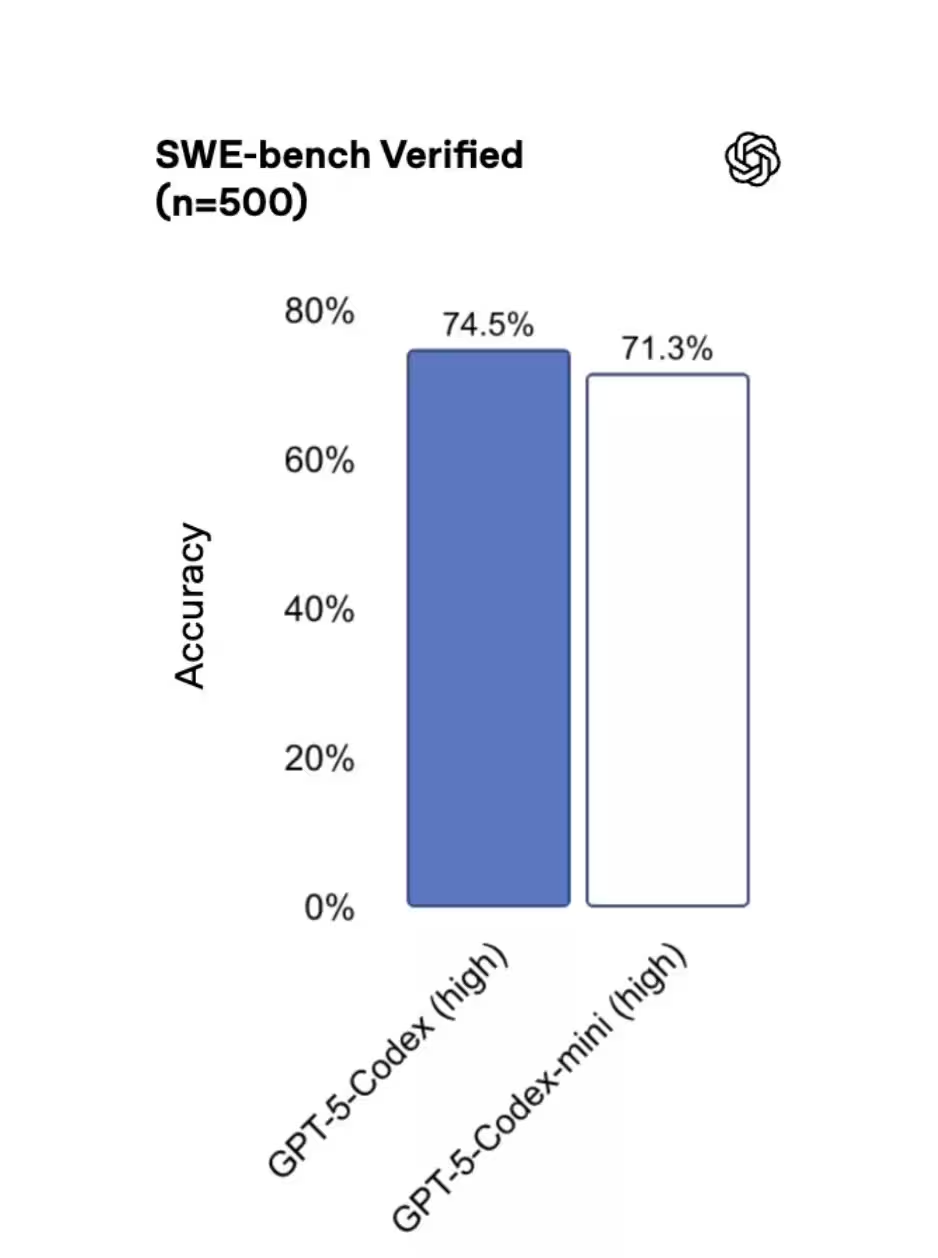

How it performs: real benchmark numbers

On SWE-bench Verified, the three models score within a few points of each other: GPT-5 High at 72.8%, GPT-5-Codex at 74.5%, and GPT-5-Codex-Mini at 71.3%. The Mini trails the full Codex model by 3.2 percentage points, which suggests it retains most of the original model's strengths while delivering meaningful cost savings, an attractive trade-off for many production workflows.
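
The gaps between those scores are easy to check by hand. The short sketch below uses only the three SWE-bench Verified figures quoted above; the helper names are made up for this example, and the "retention" ratio is just one informal way to read the numbers, not a metric OpenAI reports.

```python
# SWE-bench Verified scores as quoted in this article (percent).
SCORES = {
    "GPT-5 High": 72.8,
    "GPT-5-Codex": 74.5,
    "GPT-5-Codex-Mini": 71.3,
}


def gap_to_mini(model: str) -> float:
    """Percentage-point lead of `model` over the Mini, rounded to one decimal."""
    return round(SCORES[model] - SCORES["GPT-5-Codex-Mini"], 1)


def mini_retention() -> float:
    """Mini score as a percentage of the full GPT-5-Codex score (informal ratio)."""
    return round(SCORES["GPT-5-Codex-Mini"] / SCORES["GPT-5-Codex"] * 100, 1)


if __name__ == "__main__":
    print(gap_to_mini("GPT-5-Codex"))  # 3.2 points behind the full Codex model
    print(gap_to_mini("GPT-5 High"))   # 1.5 points behind GPT-5 High
    print(mini_retention())            # 95.7 percent of the full model's score
```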

When developers should pick the Mini

So when is the Mini the right call? OpenAI recommends using GPT-5-Codex-Mini for lighter engineering tasks or as a fallback when you approach the usage limits of the primary model. The Codex tooling even suggests switching to the Mini once you hit about 90% of your quota. Think of it as a throttle: keep high-stakes jobs on the full model, and move routine or high-volume work to the Mini to stretch budget and throughput.
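
The routing advice above can be sketched as a small decision function. This is an illustration only, not how the Codex tooling works internally: the model identifier strings, the `pick_model` function, and the way usage is measured are assumptions, and the 90% threshold is taken from the figure quoted above.

```python
# Assumed identifiers for illustration; check your own tooling for the real names.
FULL_MODEL = "gpt-5-codex"
MINI_MODEL = "gpt-5-codex-mini"

# The article says the tooling suggests switching at about 90% of quota.
SWITCH_THRESHOLD = 0.90


def pick_model(used: float, limit: float, high_stakes: bool) -> str:
    """Choose a model from current quota usage and task importance.

    used / limit: hypothetical usage counters in the same unit (e.g. requests).
    high_stakes: True for work that should stay on the full model when possible.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    usage = used / limit
    if usage >= SWITCH_THRESHOLD:
        # Near the limit: fall back to the Mini to stretch the remaining quota.
        return MINI_MODEL
    # Below the threshold: high-stakes jobs get the full model, routine work the Mini.
    return FULL_MODEL if high_stakes else MINI_MODEL


if __name__ == "__main__":
    print(pick_model(used=10, limit=100, high_stakes=True))
    print(pick_model(used=10, limit=100, high_stakes=False))
    print(pick_model(used=95, limit=100, high_stakes=True))
```

A team could just as reasonably keep high-stakes jobs on the full model right up to the limit and only move routine work early; the threshold policy here is one possible reading of the recommendation.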

Where you can use it today

The Mini is already available in the command-line interface (CLI) and as an IDE plugin, with API support coming soon. Developers can therefore use it in local workflows, CI pipelines, and editor-driven coding sessions right away, before the API rollout.

Behind the scenes: performance and reliability upgrades

OpenAI also announced infrastructure improvements to make Codex usage more predictable. GPU efficiency work and routing optimizations have allowed OpenAI to raise rate limits by 50% for ChatGPT Plus, Business, and Education subscribers. Pro and Enterprise customers receive priority processing to preserve peak speed and responsiveness. Earlier issues caused by caching errors that reduced usable capacity have been addressed, too, so developers should see a steadier, more reliable experience throughout the day.

Bottom line: GPT-5-Codex-Mini gives teams a practical way to scale coding automation without a proportional rise in cost. For many developers, it will be the smarter choice for high-volume or lower-risk tasks—especially while OpenAI continues to expand API access and tighten performance guarantees.
