
Google's new Ironwood TPU family has reignited a simmering battle in AI hardware: this time the real challenger to Nvidia isn't AMD or Intel, but Google's own custom silicon optimized for inference. With jaw-dropping memory capacity, dense interconnects and aggressive efficiency claims, Ironwood is reshaping what cloud AI looks like at scale.

Ironwood by the numbers: memory, compute and a SuperPod that scales

At its core, Ironwood (TPU v7) is designed for one thing — serving models in production. Google is pitching it as an "inference-first" chip with specs built to reduce latency, cut power per query and simplify deployment for large language models and other real-time AI services.

- Peak FP8 compute per chip: ~4,614 TFLOPS

- On-package memory: 192 GB HBM3e (roughly 7–7.4 TB/s bandwidth)

- Pod scale: up to 9,216 chips per SuperPod

- Aggregate compute per pod: ≈42.5 exaFLOPS (FP8)

- System HBM per pod: ~1.77 PB
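The pod-level totals follow directly from the per-chip figures. Here is a quick back-of-the-envelope check in Python. It assumes decimal units (1 EFLOPS = 10^6 TFLOPS, 1 PB = 10^6 GB) and uses peak rather than sustained throughput:

```python
# Back-of-the-envelope check: pod totals derived from the per-chip figures above.
CHIPS_PER_POD = 9_216
FP8_TFLOPS_PER_CHIP = 4_614   # peak FP8, per chip
HBM_GB_PER_CHIP = 192         # HBM3e capacity, per chip


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute in exaFLOPS (1 EFLOPS = 1e6 TFLOPS)."""
    return chips * tflops_per_chip / 1e6


def pod_hbm_pb(chips: int, gb_per_chip: float) -> float:
    """Aggregate HBM in petabytes, using decimal units (1 PB = 1e6 GB)."""
    return chips * gb_per_chip / 1e6


if __name__ == "__main__":
    print(f"{pod_exaflops(CHIPS_PER_POD, FP8_TFLOPS_PER_CHIP):.1f} EFLOPS")  # 42.5
    print(f"{pod_hbm_pb(CHIPS_PER_POD, HBM_GB_PER_CHIP):.2f} PB")            # 1.77
```

Both published pod figures are simply chips × per-chip value, so the ≈42.5 exaFLOPS and ~1.77 PB numbers are consistent with the per-chip specs.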

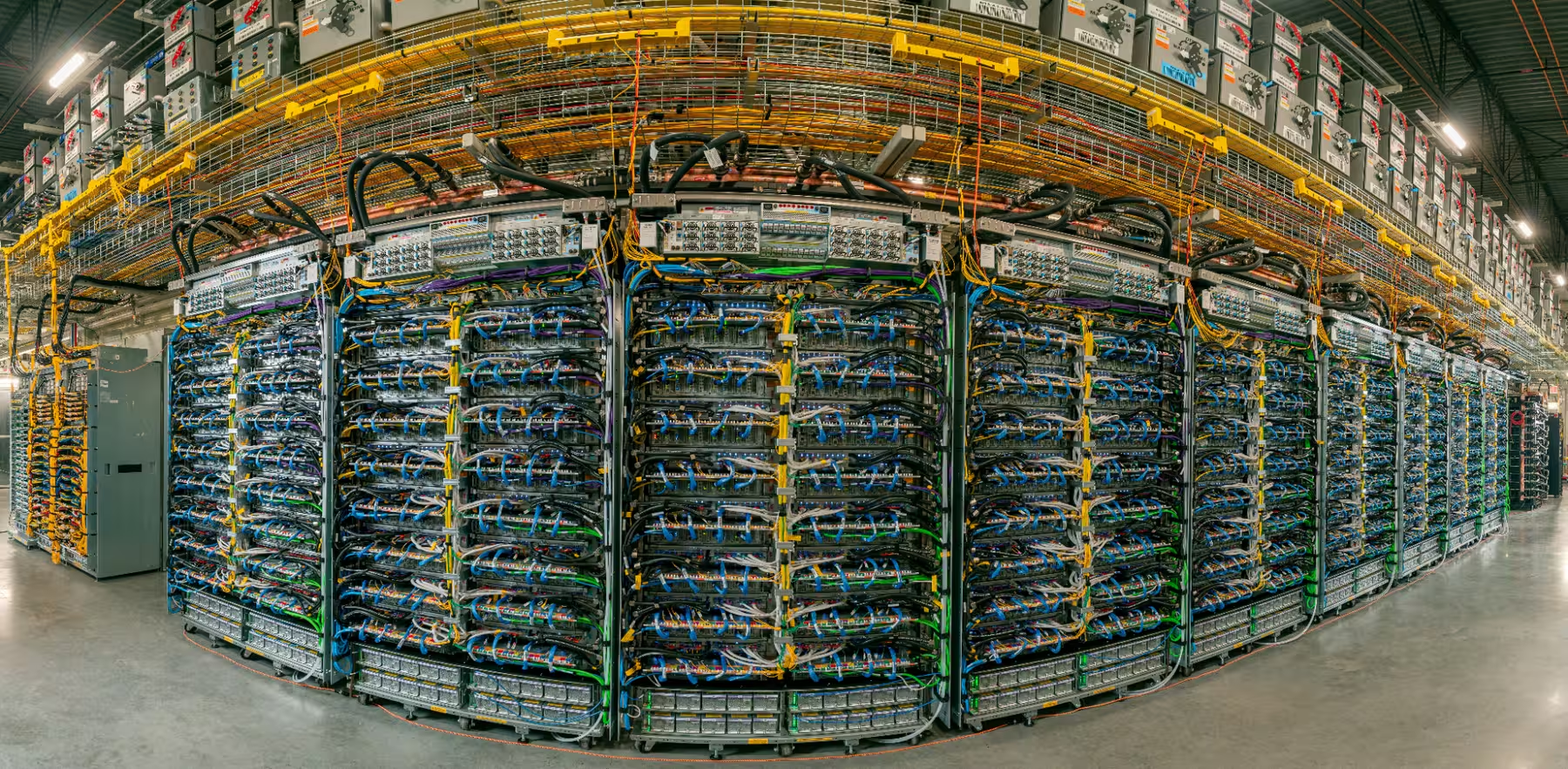

Those raw figures matter, but the story is as much about how chips talk to each other. Google uses its Inter-Chip Interconnect (ICI) in a 3D torus layout to knit thousands of chips into a cohesive SuperPod. That scale-up fabric lets the pod pool roughly 1.8 PB of HBM, so large models can stay resident in fast memory instead of shuttling weights over slower links.
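To make the torus idea concrete, here is a minimal Python sketch of chip addressing in a 3D torus. The 16 × 24 × 24 shape is a hypothetical choice that happens to multiply to 9,216; the article does not give Ironwood's actual pod dimensions, and real ICI routing is more involved. What the sketch illustrates is the wraparound: every chip has six direct links, and the worst-case hop count is roughly half that of a plain 3D mesh of the same size.

```python
# Hypothetical torus shape: 16 x 24 x 24 = 9,216 chips.
# Illustrative only; not Ironwood's published pod topology.
SHAPE = (16, 24, 24)


def neighbors(coord, shape=SHAPE):
    """Return the 6 direct neighbours of a chip; links wrap around each axis."""
    result = []
    for axis, size in enumerate(shape):
        for step in (-1, 1):
            nxt = list(coord)
            nxt[axis] = (nxt[axis] + step) % size  # wraparound link
            result.append(tuple(nxt))
    return result


def hops(a, b, shape=SHAPE):
    """Minimum number of link hops between two chips on the torus."""
    total = 0
    for x, y, size in zip(a, b, shape):
        d = abs(x - y)
        total += min(d, size - d)  # go the short way round each ring
    return total


def diameter(shape=SHAPE, wrap=True):
    """Worst-case hop count: torus (wrap=True) vs. plain mesh (wrap=False)."""
    return sum(s // 2 if wrap else s - 1 for s in shape)


if __name__ == "__main__":
    print(neighbors((0, 0, 0)))
    print("torus diameter:", diameter())
    print("mesh diameter:", diameter(wrap=False))
```

With the hypothetical shape, `diameter()` gives 32 hops versus 61 for `diameter(wrap=False)`, which is why wraparound links help keep latency between distant chips bounded.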

Why inference flips the competitive map

Training used to be the battleground: raw TFLOPs, huge memory pools and optimized kernels were the metrics that mattered most, and Nvidia GPUs dominated that space. Now, the AI economy is shifting. Once models are trained, billions of inference queries — not training runs — become the real workload. That puts a premium on latency, query throughput, energy per query and cost-efficiency.


Ironwood is built around those metrics. Large on-package memory reduces cross-chip traffic for huge models, lowering latency. Google says Ironwood delivers a significant generational jump in performance and roughly twice the performance per watt of Trillium, its previous-generation TPU. For hyperscalers and cloud customers who pay for 24/7 inference capacity, that efficiency can translate into major cost savings.
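The link between performance per watt and serving cost comes down to simple arithmetic: energy per query is sustained power divided by sustained throughput. The sketch below uses made-up placeholder numbers. The power draw, query rate, yearly volume and electricity price are all hypothetical, not published Ironwood or Nvidia figures, and the model covers electricity only, not hardware, cooling or networking:

```python
# Illustrative energy-per-query model. All inputs are hypothetical placeholders,
# not published Ironwood or Nvidia figures.


def energy_per_query_j(power_w: float, queries_per_s: float) -> float:
    """Joules per query = sustained power draw / sustained throughput."""
    return power_w / queries_per_s


def annual_energy_cost(power_w: float, queries_per_s: float,
                       queries_per_year: float, usd_per_kwh: float) -> float:
    """Electricity cost of serving a fixed yearly query volume."""
    joules = energy_per_query_j(power_w, queries_per_s) * queries_per_year
    kwh = joules / 3.6e6  # 1 kWh = 3.6 MJ
    return kwh * usd_per_kwh


# Hypothetical accelerator, and a version with 2x performance per watt
# (same power draw, twice the throughput).
baseline = dict(power_w=1_000, queries_per_s=100)
improved = dict(power_w=1_000, queries_per_s=200)

VOLUME = 1e12  # hypothetical: one trillion queries per year
PRICE = 0.08   # hypothetical: USD per kWh

if __name__ == "__main__":
    for name, cfg in (("baseline", baseline), ("2x perf/W", improved)):
        cost = annual_energy_cost(**cfg, queries_per_year=VOLUME, usd_per_kwh=PRICE)
        print(name, round(cost))
```

At a fixed query volume, doubling throughput at the same power halves energy per query. In this toy setup the yearly electricity bill drops from about $222,222 to about $111,111.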

Interconnects, SuperPods and ecosystem lock-in

Another competitive edge is integration. By offering Ironwood through Google Cloud, Google can optimize the whole stack — hardware, networking, and runtime — to drive down cost-per-query. Its SuperPod approach, with dense interconnect and a scale-up fabric, is designed to serve very large models with fewer performance penalties than a more fragmented GPU cluster would face.
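One reason large per-chip HBM matters for serving big models is that it sets how many chips a single copy of the weights must be spread across. Here is a rough Python sketch. The 1-trillion-parameter model, the 1 byte per FP8 parameter, the 80% usable-memory fraction and the 96 GB comparison device are all hypothetical assumptions:

```python
import math


def chips_to_hold_weights(params_billion: float, bytes_per_param: float,
                          hbm_gb_per_chip: float,
                          usable_fraction: float = 0.8) -> int:
    """Minimum number of chips whose combined HBM can hold the model weights.

    usable_fraction is a hypothetical allowance for KV cache, activations and
    runtime overhead; real headroom depends on the model and serving stack.
    """
    # 1e9 params * bytes per param / 1e9 bytes per GB = GB of weights
    weight_gb = params_billion * bytes_per_param
    return math.ceil(weight_gb / (hbm_gb_per_chip * usable_fraction))


if __name__ == "__main__":
    # Hypothetical 1T-parameter model stored at FP8 (1 byte per parameter).
    for hbm in (192, 96):
        print(hbm, "GB/chip ->", chips_to_hold_weights(1_000, 1.0, hbm), "chips")
```

Under these assumptions the model fits on 7 chips with 192 GB each versus 14 with 96 GB each. Fewer shards generally means less cross-chip traffic per query, though real deployments may still shard for throughput rather than capacity.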

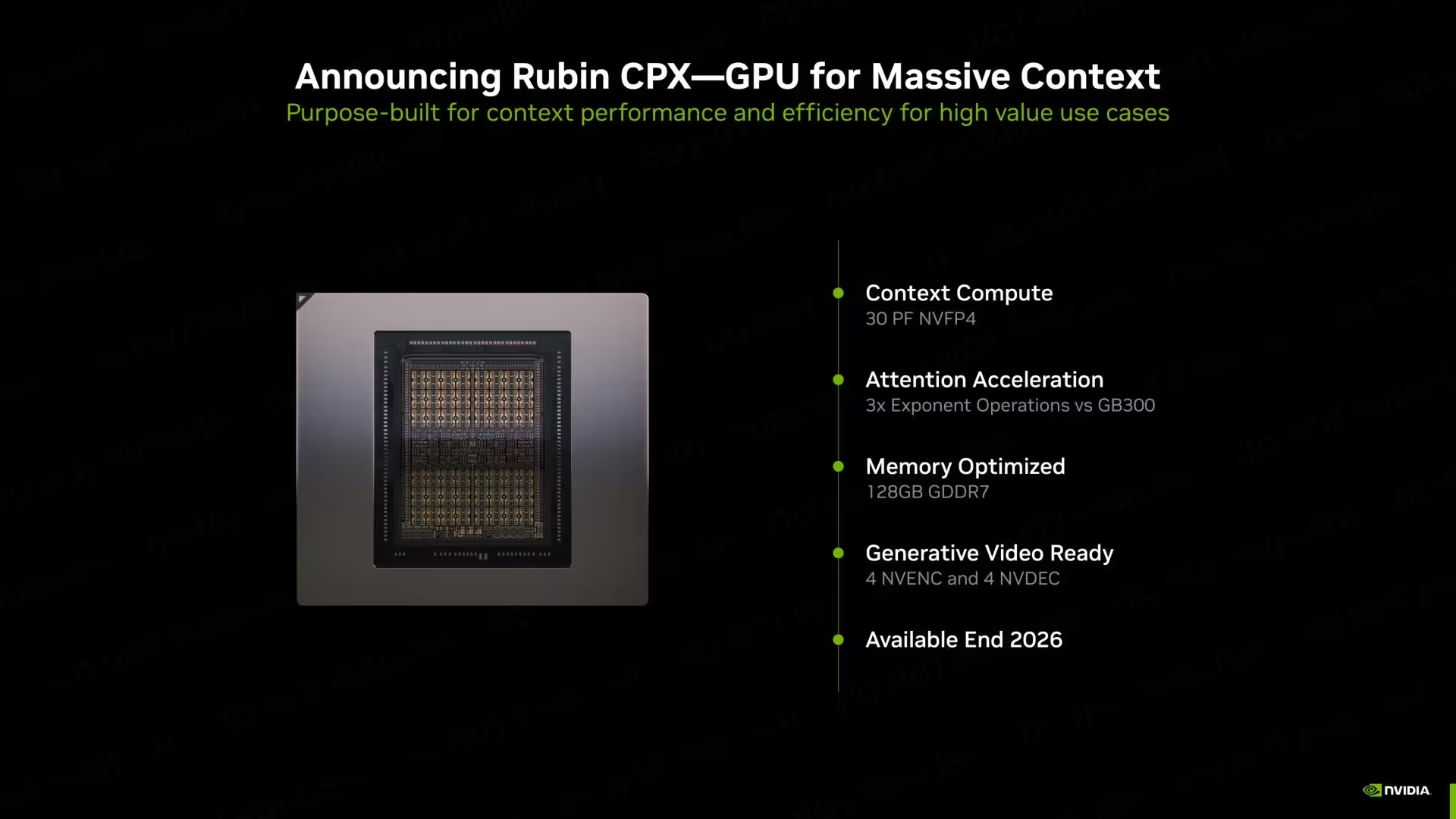

That vertical integration raises strategic risks for Nvidia. Even with Nvidia's Rubin racks and B200 Blackwell GPUs targeting inference, cloud customers might prefer native TPU infrastructure if it measurably lowers latency and operating costs. The result could be stronger lock-in to a particular cloud provider's hardware architecture.

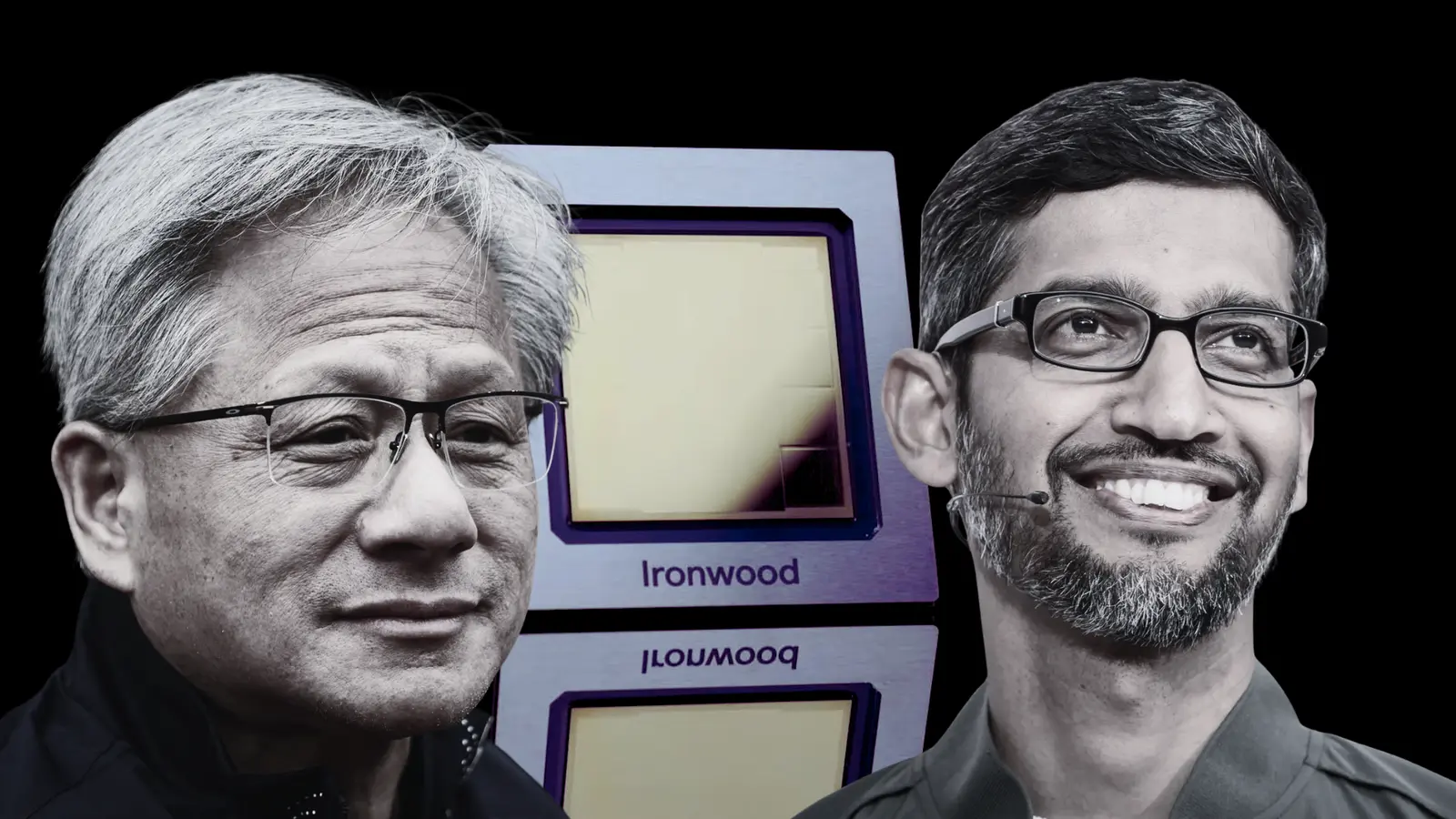

Jensen Huang has noticed

Nvidia's CEO has publicly acknowledged how hard building custom ASICs is and has called out TPUs as a meaningful competitor. That recognition matters: when dominant incumbents publicly identify a rival technology as a threat, it typically signals focused investment and faster product cycles on both sides.

So, is Nvidia doomed?

Not at all — but the rules are changing. Nvidia still leads in versatile GPU compute, a massive software ecosystem and broad market adoption for training and many inference scenarios. What Ironwood does is open a new axis of competition centered on inference economics. For companies running massive real-time deployments, Google’s TPU strategy could become the deciding factor.

In short: the AI contest is evolving from "who has the most flops" to "who serves the most queries, cheapest and fastest." With Ironwood stepping into production, expect cloud providers, hyperscalers and enterprises to reassess where they run inference workloads — and that makes Google the most interesting challenger to watch right now.

Source: wccftech

Comments

mechbit

If Google actually cuts power per query and keeps massive models in HBM, cloud inference costs could drop big. Nvidia still has the ecosystem tho, so not dead yet

DaNix

All these on-paper TFLOPs and 192GB HBM sound insane, but is it real across workloads? latency wins only if software + availability match. cloud lock-in worries me…
