
At CES, Razer teased Project Motoko, an "AI-native" headset concept built to see what the wearer sees. With integrated cameras, smart mics and built-in AI links, Motoko sketches out a future where headsets blend gaming, productivity and everyday life.

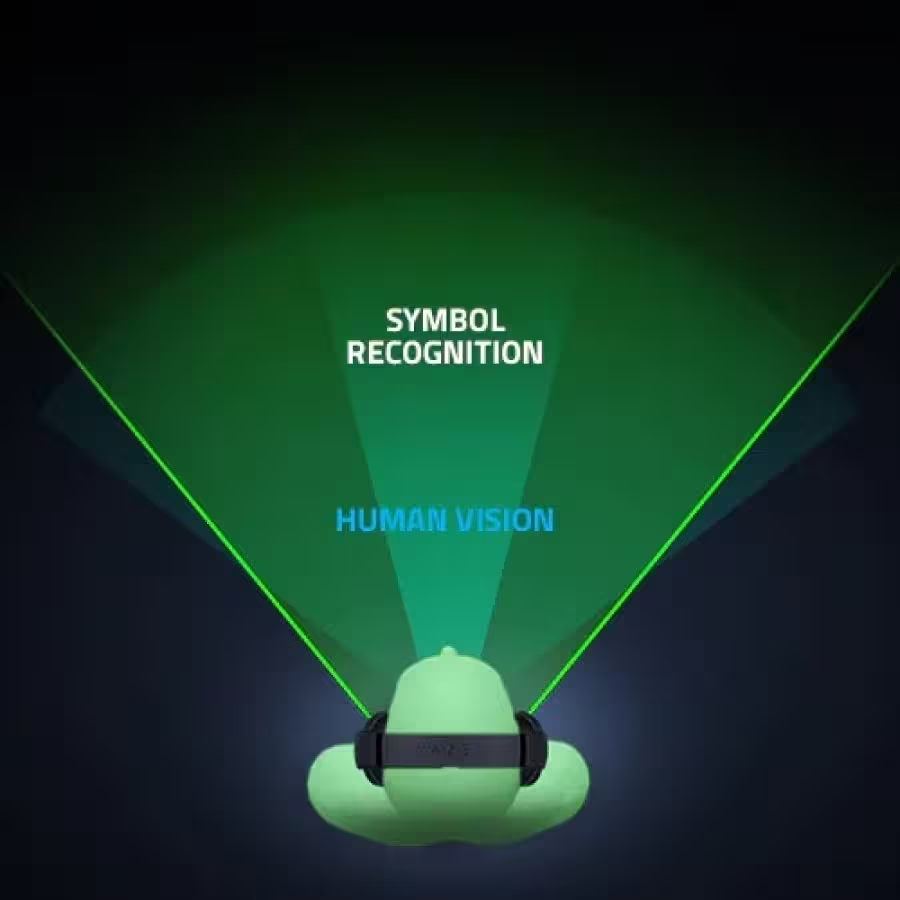

Eye-level cameras that read the world

The Motoko concept features dual first-person-view cameras mounted at eye level, designed to capture what the wearer sees in real time. That positioning isn't just for immersion: it enables instant object and text recognition. Imagine walking down a street and the headset translating a foreign sign as you glance at it, or scanning a printed document and presenting a concise summary in seconds.

Razer gave concrete examples: translating street signs, tracking gym reps for fitness coaching, or summarizing documents on the fly. Those are the kinds of practical integrations that turn raw computer vision into everyday utility.

Sound that knows the difference

On the audio side, Motoko combines far-field and near-field microphones to capture both ambient dialogue and close-range voice commands. Together they let the headset interpret context, whether you want to give a quick spoken request or have the device pick up and summarize a conversation happening within your field of view.


Razer describes the headset as a full-time AI assistant that adapts to schedules, preferences and habits — reacting instantly to prompts and learning over time. It’s an ambitious pitch: more than a gaming peripheral, Motoko is framed as a wearable that augments daily tasks.

Plugging into multiple AI ecosystems

One of the more eyebrow-raising details is Motoko's promised compatibility with Grok, ChatGPT and Gemini. Razer says the headset "connects effortlessly" with those models, hinting at a multi-AI strategy that would let users leverage different back-end assistants depending on use case.

That raises questions about data routing, latency and which services handle vision, speech and reasoning tasks. Razer hasn’t provided technical specifics yet — likely because Motoko is pitched as a concept rather than a shipping product.

What this concept means for wearables

Project Motoko is less a product announcement and more a preview of where AI-driven wearables might head. It combines on-device sensors, cloud AI and conversational assistants into a single platform. For gamers, that might mean smarter in-game overlays; for professionals, live document summaries; for fitness fans, automated rep counting and form feedback.

- Key features: eye-level dual cameras, dual mic arrays, instant object/text recognition.

- Potential uses: translation, productivity summaries, fitness tracking, augmented gaming.

- Open questions: privacy controls, on-device vs. cloud processing, battery life and availability.

Razer’s Motoko offers an intriguing glimpse of AI-native headsets — a wearable that sees, listens and responds. Whether that vision becomes a commercial reality remains to be seen, but the concept points to a near future where headsets do more than play sound: they actively interpret and augment the world around you.

Source: gsmarena
