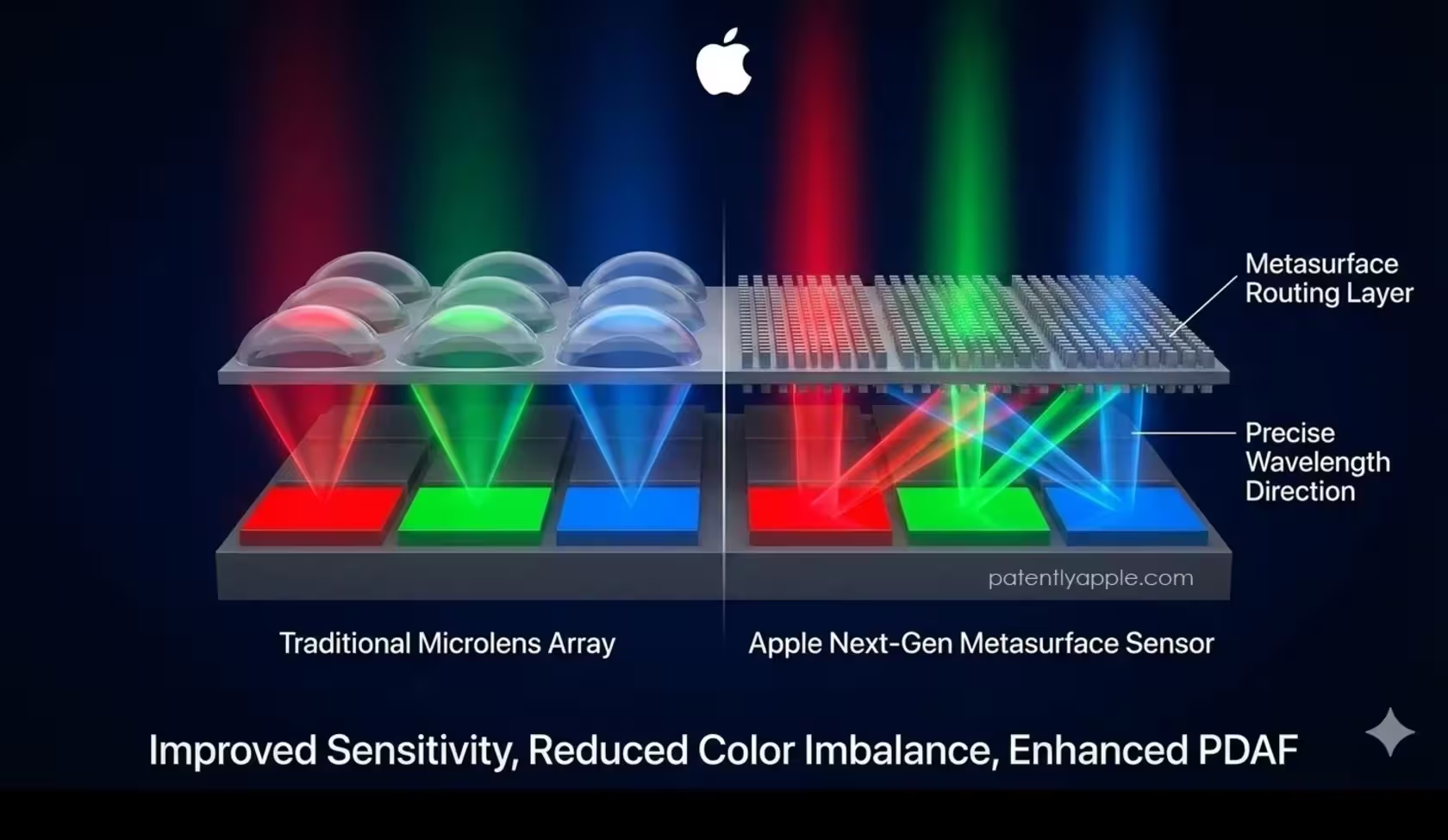

You might soon carry a camera that steers light with nanostructures rather than with tiny curved lenses. Apple is quietly exploring a redesign of image sensors that swaps the conventional microlens array for an engineered optical layer called a metasurface.

Picture the sensor as a three-tier sandwich: the pixel layer at the bottom, a color filter in the middle, and a wafer-thin metasurface on top. That metasurface isn't a lens in the traditional sense; it's a patterned layer of nanostructures that steers different wavelengths toward specific pixels with great precision. The result is selective routing: red, green and blue light that strikes the same area can be separated and sent to different pixels.

So why does that matter? Because it lets Apple tune sensitivity per color. Red and blue pixels, which are typically starved for photons, can be given more of the metasurface's area to gather light, while green pixels stay smaller and preserve fine detail. A conventional microlens can only focus light onto the pixel directly beneath it, so it can't strike this balance between sensitivity and detail in the same way.

This approach promises brighter low-light photos, truer color balance and faster phase-detection autofocus without fatter camera bumps.

Phase-detection autofocus can be folded directly into the metasurface layer, which means focus becomes faster and more reliable without sacrificing pixel density or increasing module thickness. In plain terms: better autofocus, especially in dim scenes, without the usual hardware trade-offs.

Beyond image quality, metasurfaces open the door to sleeker multi-camera layouts and thinner devices. That versatility matters across Apple's lineup, from iPhones and iPads to Macs and even wearables, because the company can push imaging performance up while keeping products slim.

There are practical benefits too. With better color separation at the sensor itself, images need less heavy-handed software correction. Less computational fixing. More faithful captures. That is a meaningful shift, because it moves the magic from software band-aids back into the optics.

It's early days, but Apple's exploration of metasurface sensors signals a desire to overcome the physical limits of today's mobile cameras. If the company pulls this off, the next generation of Apple cameras could rewrite the rulebook for smartphone photography. Keep an eye on the hardware, because how light is directed matters more than ever.