
Background: From Physical Models to Massive Datasets

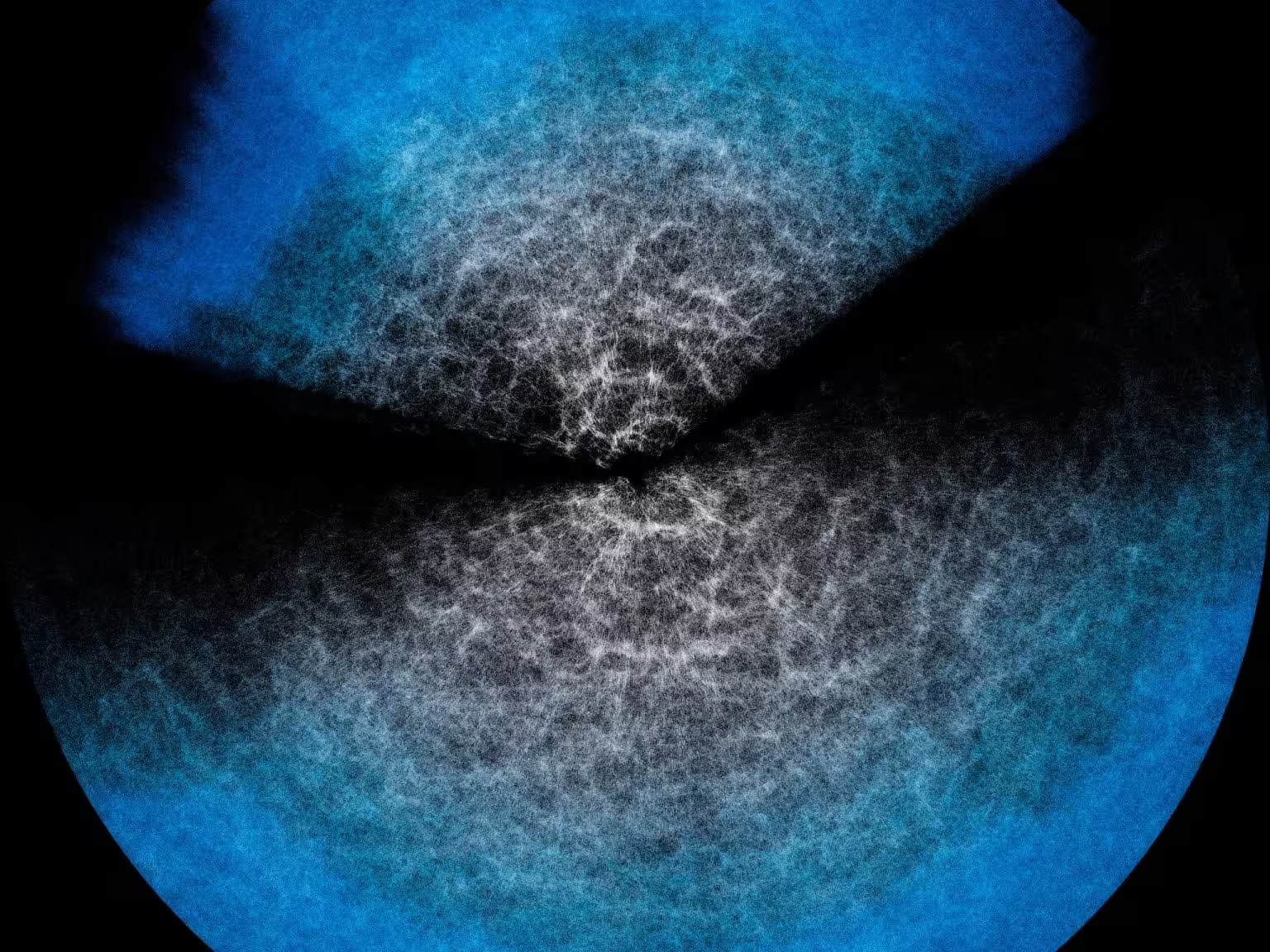

Modern cosmology links phenomena across enormous scales: large-scale structure in the Universe emerges from microscopic physical processes. Theoretical models encode that connection and produce statistical predictions for the observable patterns of galaxies, cosmic shear, and other probes. In practice, astronomers feed observational inputs into complex codes that compute expected signals — a process that can be computationally intensive and time-consuming.

As survey datasets grow, exhaustively evaluating those theoretical models for every analysis becomes impractical. Current and upcoming projects such as the Dark Energy Spectroscopic Instrument (DESI), which has already released initial data, and ESA’s Euclid mission will produce orders of magnitude more measurements than earlier surveys. Repeatedly running full forward-model calculations to explore parameter space or perform likelihood analyses would demand prohibitive compute resources.

How Effort.jl Accelerates Model Predictions

Emulators are a practical solution: surrogate models that reproduce the outputs of expensive codes far more quickly. Effort.jl is an emulator framework built around a neural network core that learns the mapping between input parameters (for example cosmological parameters, bias or nuisance parameters) and the model’s precomputed predictions.

Learning the model response

During training, the network is shown examples of parameter sets and the corresponding model outputs. After this training phase, the emulator can generalize and provide accurate predictions for new parameter combinations very rapidly. Importantly, the emulator does not derive or replace the underlying physics; it approximates the model's numerical response so analysts can obtain near-instant predictions for downstream tasks such as parameter estimation and forecast studies.
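The training step described above can be sketched with a toy example. This is a minimal illustration under stated assumptions, not Effort.jl's code: `expensive_model` is a hypothetical stand-in for a slow theory code, the network is a single hidden layer written by hand in plain Python, and all names and hyperparameters are chosen for illustration only.

```python
import math
import random


def expensive_model(theta):
    """Toy stand-in for a slow theory code (hypothetical, for illustration only)."""
    return math.sin(2.0 * theta) + 0.5 * theta


def init_network(hidden_units, rng):
    """One hidden tanh layer with small random weights."""
    return {
        "w1": [rng.uniform(-1.0, 1.0) for _ in range(hidden_units)],
        "b1": [rng.uniform(-1.0, 1.0) for _ in range(hidden_units)],
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(hidden_units)],
        "b2": 0.0,
    }


def hidden_layer(net, theta):
    return [math.tanh(w * theta + b) for w, b in zip(net["w1"], net["b1"])]


def predict(net, theta):
    """Emulator prediction: a cheap forward pass, no call to expensive_model."""
    hidden = hidden_layer(net, theta)
    return sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"]


def mean_squared_error(net, thetas, targets):
    return sum((predict(net, t) - y) ** 2 for t, y in zip(thetas, targets)) / len(thetas)


def train(net, thetas, targets, epochs=3000, learning_rate=0.02):
    """Full-batch gradient descent on half the squared error, with hand-written backprop."""
    n, size = len(thetas), len(net["w1"])
    for _ in range(epochs):
        grad_w1, grad_b1, grad_w2 = [0.0] * size, [0.0] * size, [0.0] * size
        grad_b2 = 0.0
        for theta, target in zip(thetas, targets):
            hidden = hidden_layer(net, theta)
            error = sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"] - target
            grad_b2 += error
            for j in range(size):
                grad_w2[j] += error * hidden[j]
                back = error * net["w2"][j] * (1.0 - hidden[j] ** 2)  # tanh' = 1 - tanh^2
                grad_w1[j] += back * theta
                grad_b1[j] += back
        for j in range(size):
            net["w1"][j] -= learning_rate * grad_w1[j] / n
            net["b1"][j] -= learning_rate * grad_b1[j] / n
            net["w2"][j] -= learning_rate * grad_w2[j] / n
        net["b2"] -= learning_rate * grad_b2 / n
    return net


# Training set: parameter values and the precomputed model outputs.
train_thetas = [-1.0 + 0.1 * i for i in range(21)]
train_targets = [expensive_model(t) for t in train_thetas]

network = init_network(hidden_units=8, rng=random.Random(0))
mse_before = mean_squared_error(network, train_thetas, train_targets)
train(network, train_thetas, train_targets)
mse_after = mean_squared_error(network, train_thetas, train_targets)

# Query the trained emulator at a parameter value that was not in the training set.
new_theta = 0.37
emulated = predict(network, new_theta)
reference = expensive_model(new_theta)
print(f"MSE before: {mse_before:.4f}, after: {mse_after:.4f}")
print(f"theta={new_theta}: emulator={emulated:.4f}, full model={reference:.4f}")
```

Once trained, `predict` never calls `expensive_model`, which is where the speed-up of a real emulator comes from. A production emulator would map many parameters to a full vector of predictions rather than one number to one number.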

Built-in sensitivity and gradient information

Effort.jl’s innovation lies in reducing the required training data by injecting prior knowledge of how predictions change with parameters. The code incorporates gradient information — numerical derivatives that describe the direction and magnitude of change when a parameter is perturbed slightly. By learning from both model outputs and their gradients, Effort.jl avoids forcing the network to rediscover known sensitivities, which dramatically lowers the number of training examples and overall compute needed. That efficiency makes it possible to run accurate emulations on smaller machines and to iterate analyses more quickly.
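To see why gradient information can reduce the number of training examples, consider a deliberately simplified sketch. It is an illustration of the general idea only, not Effort.jl's actual training procedure: a polynomial fit stands in for the neural network, `model` is a hypothetical toy function, and its derivative `model_gradient` is assumed to be known exactly. Both surrogates see the same three parameter values, but the second also uses the derivative at each one.

```python
import math


def solve(matrix, rhs):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    solution = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * solution[j] for j in range(i + 1, n))
        solution[i] = (aug[i][n] - tail) / aug[i][i]
    return solution


def model(theta):
    """Toy stand-in for an expensive prediction (hypothetical)."""
    return math.sin(theta)


def model_gradient(theta):
    """Sensitivity of the toy model to its parameter, assumed known."""
    return math.cos(theta)


def value_row(theta, degree):
    """Row of the linear system that matches the polynomial's value at theta."""
    return [theta ** k for k in range(degree + 1)]


def gradient_row(theta, degree):
    """Row of the linear system that matches the polynomial's derivative at theta."""
    return [k * theta ** (k - 1) if k > 0 else 0.0 for k in range(degree + 1)]


def polyval(coeffs, theta):
    return sum(c * theta ** k for k, c in enumerate(coeffs))


train_thetas = [0.0, 1.0, 2.0]  # only three training parameter values

# Surrogate A: values only. Three conditions determine a quadratic.
coeffs_values = solve(
    [value_row(t, 2) for t in train_thetas],
    [model(t) for t in train_thetas],
)

# Surrogate B: values and gradients. Six conditions determine a quintic.
coeffs_gradients = solve(
    [value_row(t, 5) for t in train_thetas] + [gradient_row(t, 5) for t in train_thetas],
    [model(t) for t in train_thetas] + [model_gradient(t) for t in train_thetas],
)

# Compare both surrogates with the toy model on a dense set of test values.
test_thetas = [0.05 * i for i in range(41)]
error_values = max(abs(polyval(coeffs_values, t) - model(t)) for t in test_thetas)
error_gradients = max(abs(polyval(coeffs_gradients, t) - model(t)) for t in test_thetas)
print(f"max error, values only:       {error_values:.2e}")
print(f"max error, values + gradients: {error_gradients:.2e}")
```

With the same three training points, the gradient-informed surrogate tracks the toy model far more closely, because each training example carries twice as much information about the model's behavior.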

Validation, Accuracy, and Scientific Impact

Any surrogate must be validated carefully before being trusted for scientific inference. The recent study validating Effort.jl shows strong agreement between emulator outputs and full model calculations across both simulated tests and real survey data. Where traditional model runs required simplifications or trimming of analysis to remain tractable, Effort.jl allowed researchers to include previously omitted components without incurring prohibitive compute costs.
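A validation check of this kind can be sketched as follows. The example is a minimal illustration under stated assumptions, not the procedure used in the study: `full_model` is a hypothetical toy function, the emulator is a simple lookup table with linear interpolation rather than a neural network, and the error metric (maximum relative error on held-out parameter values) is one common choice among several.

```python
import math


def full_model(theta):
    """Toy stand-in for the full calculation (hypothetical, strictly positive)."""
    return math.exp(-theta) * (1.0 + theta ** 2)


def build_table_emulator(lower, upper, n_nodes):
    """Precompute the model on a grid and return a linear-interpolation emulator."""
    step = (upper - lower) / (n_nodes - 1)
    nodes = [full_model(lower + i * step) for i in range(n_nodes)]

    def emulator(theta):
        position = (theta - lower) / step
        index = min(max(int(position), 0), n_nodes - 2)  # clamp to a valid interval
        fraction = position - index
        return nodes[index] * (1.0 - fraction) + nodes[index + 1] * fraction

    return emulator


def max_relative_error(emulator, model, thetas):
    """Largest fractional disagreement between emulator and full model."""
    return max(abs(emulator(t) - model(t)) / abs(model(t)) for t in thetas)


# Two emulators built from different amounts of precomputed data.
coarse_emulator = build_table_emulator(0.0, 2.0, n_nodes=5)
fine_emulator = build_table_emulator(0.0, 2.0, n_nodes=41)

# Held-out parameter values that do not coincide with the grid nodes.
held_out = [0.013 + 0.0397 * i for i in range(50)]

error_coarse = max_relative_error(coarse_emulator, full_model, held_out)
error_fine = max_relative_error(fine_emulator, full_model, held_out)
print(f"coarse emulator, max relative error: {error_coarse:.2e}")
print(f"fine emulator,   max relative error: {error_fine:.2e}")
```

The key point is that the emulator is judged on parameter values it was not built from, and that the acceptable error threshold should be set by the precision the downstream analysis requires.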

That practical advantage matters for experiments like DESI and Euclid. Both projects aim to measure the large-scale distribution of matter with unprecedented precision to constrain dark energy, gravity, and cosmic inflation models. Emulators such as Effort.jl enable deeper, faster exploration of parameter space, robust uncertainty quantification, and the inclusion of more complete physical ingredients during inference.

Related technologies and future prospects

Effort.jl complements advances in high-performance computing, differentiable programming, and GPU acceleration. Combining gradient-informed emulators with automatic differentiation and modern hardware could further cut costs and increase accuracy. As survey volumes continue to grow, emulator frameworks will play a central role in delivering timely scientific results.

Expert Insight

Dr. Alessia Rossi, an astrophysicist familiar with cosmological inference tools, comments: "Effort.jl represents a pragmatic step forward. By using gradients and domain knowledge, it reduces the training burden while maintaining fidelity to the underlying model. For large surveys, that means more complete analyses can be run without waiting weeks of compute time." This kind of hybrid approach, which combines physics-based models with machine learning surrogates, is likely to become a mainstay of analyses of upcoming data releases.

Conclusion

Effort.jl exemplifies how emulator techniques can bridge the gap between computationally expensive theoretical models and the data volumes expected from next-generation surveys. By leveraging gradient information and prior knowledge of model sensitivities, the emulator achieves accurate, resource-efficient predictions that match full-model outputs in validation tests. For projects such as DESI and Euclid, tools like Effort.jl will accelerate analysis cycles, enable the inclusion of richer model components, and help maximize the scientific return from vast cosmological datasets.

Source: SciTechDaily
