
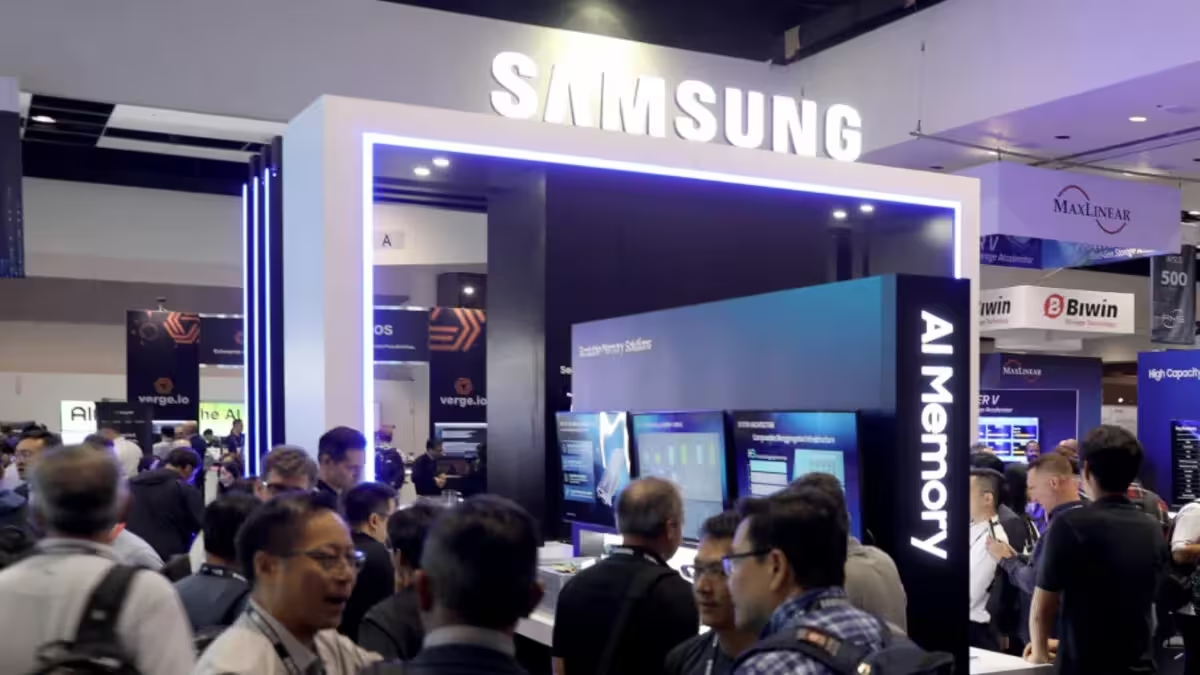

Samsung is moving fast to expand its high-bandwidth memory (HBM) output as AI demand surges. After years of development and product upgrades, the company now faces more orders than it can fulfill — and it plans to scale manufacturing to keep pace.

From early setbacks to market-leading HBM chips

GenAI chatbots and large-scale AI models that began capturing headlines in 2022 have driven explosive demand for AI accelerators. Those accelerators rely on HBM, a specialized memory designed for massive throughput. Samsung initially lagged behind competitors when some major customers — notably Nvidia — passed on its earlier HBM designs due to performance gaps. That changed after Samsung overhauled its memory division and improved its HBM technology.

Today, Samsung’s HBM3E and HBM4 are among the most sought-after modules in the market. Reports say both Broadcom and Nvidia regard Samsung’s HBM4 as top-tier, and enterprise customers are snapping up available supply.

Big capacity targets to meet booming AI demand

According to ET News, Samsung aims to boost HBM production capacity by roughly 50% by the end of 2026. The company currently manufactures about 170,000 HBM wafers per month, a figure on par with SK Hynix's output, and plans to push that toward 250,000 wafers per month, with the added capacity aimed mainly at HBM4.

- Current output: ~170,000 HBM wafers/month

- Target output: ~250,000 wafers/month by late 2026

- Main focus: scaling HBM4 capacity to meet demand from AI accelerators

That expansion would help Samsung capture more of the premium HBM market and reduce the risk of lost revenue from unmet orders.
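Both wafer figures are approximate, so "roughly 50%" is itself a rounded number. As a quick sanity check, here is a minimal Python sketch that uses only the two reported values; the helper name `percent_increase` is illustrative, not something from the report.

```python
def percent_increase(current: float, target: float) -> float:
    """Return the percentage growth needed to go from current to target."""
    if current <= 0:
        raise ValueError("current must be positive")
    return (target - current) / current * 100.0


# Approximate figures as reported (HBM wafers per month).
current_wafers = 170_000
target_wafers = 250_000

added_wafers = target_wafers - current_wafers
growth_pct = percent_increase(current_wafers, target_wafers)

print(f"Added capacity: {added_wafers:,} wafers/month")  # Added capacity: 80,000 wafers/month
print(f"Growth: {growth_pct:.1f}%")                      # Growth: 47.1%
```

On these numbers the planned increase works out to about 47%, which is consistent with the "roughly 50%" headline figure.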

Where the growth will happen

The additional capacity will come through investments in Samsung’s Pyeongtaek 4 (P4) facility. Upgrading fabs and increasing wafer output are capital-intensive moves, but with HBM prices elevated and long-term AI demand looking strong, the company appears willing to invest heavily.

Imagine a future data center running next-generation LLM workloads. Memory bandwidth is often a bottleneck for both training and inference, so gains in HBM bandwidth can translate into faster workloads, which explains why chipmakers and cloud providers are racing to secure HBM supply now.

As AI models scale and more industries adopt GenAI tools, memory producers like Samsung are repositioning themselves not just to meet current demand, but to shape the market for years to come.

Source: sammobile
