DeepSeek Unveils DeepSeek-R1-0528 With Advanced Reasoning Capabilities

DeepSeek, a leading Chinese artificial intelligence (AI) company, has announced a substantial upgrade to its open-source large language model (LLM) lineup with the release of DeepSeek-R1-0528. The new model shows significant performance gains in mathematical reasoning, scientific problem-solving, and programming, bringing it closer to industry frontrunners like OpenAI’s o3 and Google’s Gemini 2.5 Pro.

Key Features and Technical Improvements

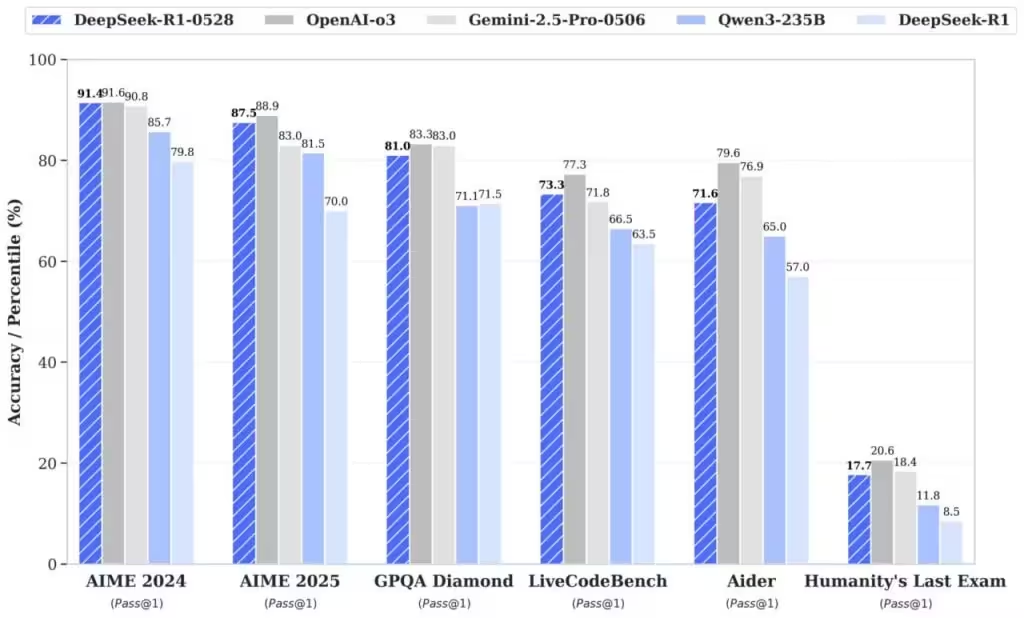

According to data shared on the Hugging Face platform, DeepSeek-R1-0528 benefits from increased computational resources and advanced post-training algorithmic optimizations. These upgrades have translated into remarkable improvements across key benchmarks:

• AIME 2025 Math Test: Model accuracy has soared from 70% to an impressive 87.5%.
• LiveCodeBench Programming Benchmark: DeepSeek-R1-0528 boosted its performance from 63.5% to 73.3%.
• Humanity’s Last Exam: Accuracy jumped from 8.5% to 17.7% on this challenging assessment.
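
To put these figures on a common footing, the gains can be expressed both in absolute percentage points and as relative improvement over the earlier score. The following is a minimal sketch that uses only the numbers quoted above; the `SCORES` dictionary and the `gains` helper are illustrative names, not part of any DeepSeek tooling.

```python
# Benchmark scores in percent, as quoted in the article: (before, after).
SCORES = {
    "AIME 2025": (70.0, 87.5),
    "LiveCodeBench": (63.5, 73.3),
    "Humanity's Last Exam": (8.5, 17.7),
}


def gains(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = absolute / before * 100.0
    return round(absolute, 1), round(relative, 1)


for name, (before, after) in SCORES.items():
    abs_pts, rel_pct = gains(before, after)
    print(f"{name}: +{abs_pts} points ({rel_pct}% relative)")
```

Viewed this way, the AIME 2025 gain is the largest in absolute terms, while the Humanity’s Last Exam score more than doubles from a low starting point.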

Performance Comparison: A New Challenger for OpenAI o3 and Gemini 2.5 Pro

These test results suggest that DeepSeek-R1-0528 is a compelling competitor to market leaders o3 and Gemini 2.5 Pro, especially in domains where advanced reasoning and logic are essential. By delivering near state-of-the-art results in math and programming, DeepSeek is positioning itself as a major player in the global AI development landscape.

Compact Model Variant for Versatile Deployments

Alongside its flagship release, DeepSeek introduced a lighter version, DeepSeek-R1-0528-Qwen3-8B. Designed for deployment on lower-spec hardware, this 8-billion-parameter model reportedly outperforms Qwen3-8B and matches the performance of Qwen3-235B-thinking, while requiring a minimum of just 16 GB of GPU memory for FP16 runs. This makes advanced AI technology accessible to developers and organizations with limited hardware resources.
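
The 16 GB figure is consistent with a simple back-of-the-envelope estimate: FP16 stores each parameter in 2 bytes, so 8 billion parameters need about 16 GB for the weights alone. The sketch below assumes exactly 8 billion parameters and counts only weights; activations and the key-value cache used during inference add to the total. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Estimate memory for model weights alone, in decimal gigabytes.

    Assumption: 2 bytes per parameter for FP16. Activations, the KV cache,
    and framework overhead are not included.
    """
    return num_params * bytes_per_param / 1e9


# 8 billion parameters is the article's rounded figure, not an exact count.
print(f"FP16 weights: {weight_memory_gb(8e9):.1f} GB")
```

Passing `bytes_per_param=1` gives the corresponding estimate for an 8-bit quantized copy of the weights, which is roughly half the FP16 footprint.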

Open-Source Commitment and Developer Accessibility

Continuing its dedication to open innovation, DeepSeek has released both models as open-source software under the MIT license, which permits both research and commercial use. The complete models are available on Hugging Face, supporting documentation can be found on GitHub, and the model can also be accessed through DeepSeek’s own API, which simplifies integration and scaling for AI developers. Existing DeepSeek API users will automatically receive the upgrade to the newest version.

Community Reception and Market Impact

The release of DeepSeek-R1-0528 has generated considerable positive buzz across tech-focused social media platforms. Influencers within the developer community have praised its superior programming abilities and identified it as a serious challenger to OpenAI o3. As AI model deployment becomes more democratized, DeepSeek’s aggressive pace of innovation and open-source ethos are reshaping the competitive landscape.

Use Cases and Industry Relevance

With stronger complex reasoning in mathematics, science, and code generation, DeepSeek-R1-0528 is well suited for educational technology, advanced research, data science applications, and enterprise-grade automation. Its benchmark gains and permissive licensing make it an attractive option for organizations seeking cutting-edge AI capabilities with maximum flexibility.

Source: VentureBeat
