
High-speed sensors convert artistic touch into measurable timbre control

High-speed sensing technology has delivered long-sought scientific proof that skilled pianists can change a piano's timbre (the perceived tonal color of a note) purely through fingertip movement. A collaborative research team led by Dr. Shinichi Furuya at the NeuroPiano Institute and Sony Computer Science Laboratories recorded keyboard motion with millisecond precision and showed that listeners reliably perceived the timbral intentions pianists aimed to produce. This work, published in Proceedings of the National Academy of Sciences (PNAS) on September 22, 2025, connects a century-old artistic intuition with modern sensor science and has implications for pedagogy, neuroscience, rehabilitation, and human–machine interfaces.

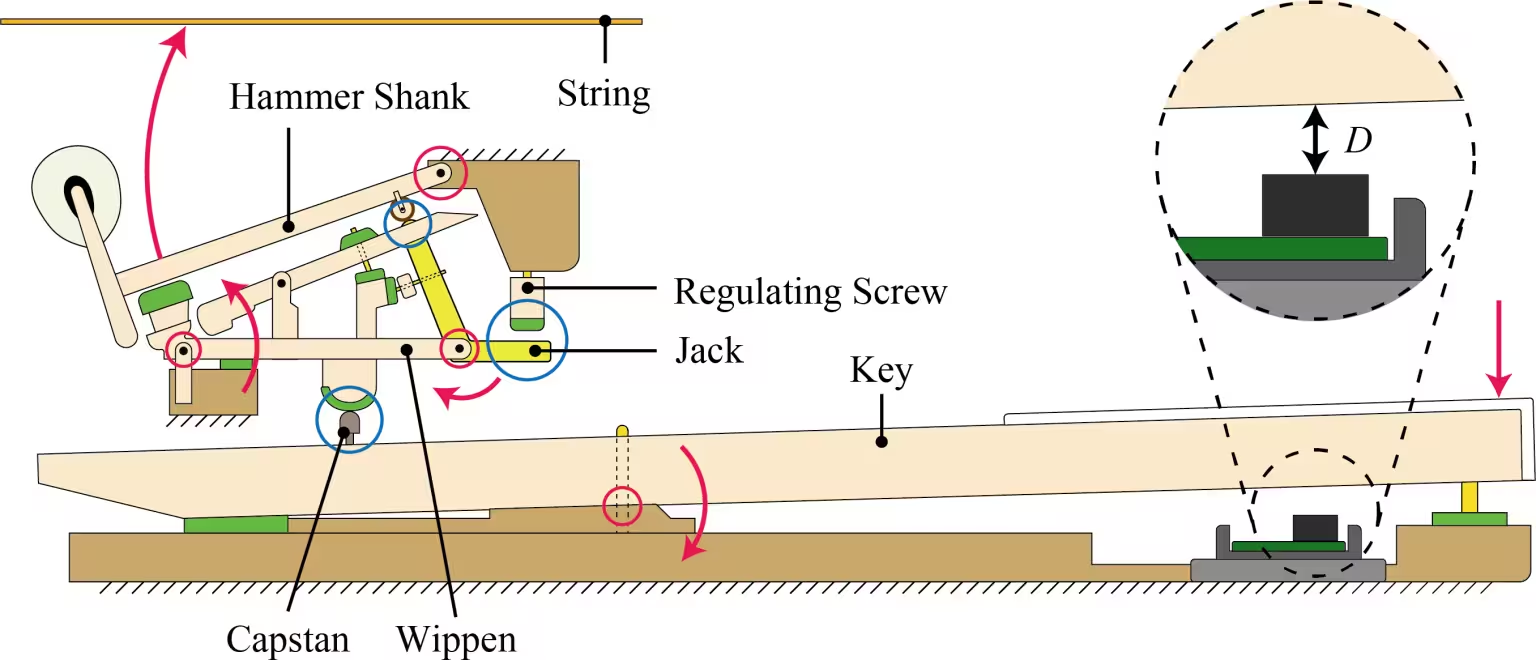

Figure 1: Piano Keyboard Action Mechanism and Non-Contact Sensor. The mechanism of the HackKey non-contact sensor, which measures piano keyboard movement at a temporal resolution of 1000 fps. It utilizes light reflection to measure the position of the underside of the key. Credit: NeuroPiano Institute

Scientific context: why timbre and touch matter

Timbre is the perceptual property that lets us distinguish two sounds that have the same pitch and loudness—for example, a violin and a flute playing the same note. In musical performance, timbre is central to expression. For bowed or wind instruments, the physical mechanisms that shape timbre (bow speed, embouchure) have been measured and taught for decades. By contrast, the piano has long been treated as mechanically fixed: press a key with a given force, and the resulting hammer–string interaction yields predictable harmonic content and loudness. Musicians and teachers have nevertheless described subtle tonal differences produced by touch—descriptions often framed as metaphors rather than measurable phenomena.

Early 20th-century debates in venues such as Nature raised the question of whether pianists can intentionally alter timbre through technique. Until now, objective, high-resolution data linking small variations in key motion to perceived timbral changes had not been available. That gap made it difficult to teach, quantify, or transfer the motor skills that underpin expressive piano playing. The new study addresses that absence by combining ultra-precise sensing, controlled listening tests, and statistical modeling to isolate the movement parameters that causally affect timbre perception.

Experiment and sensor system: measuring keystrokes at 1,000 fps

The core hardware used in the study is HackKey, a proprietary non-contact optical sensor array developed by the NeuroPiano Institute. HackKey monitors the underside of every key on an 88-key piano with a temporal resolution of 1,000 frames per second (one sample every 1 ms) and a spatial precision down to 0.01 mm. Because the sensor is non-contact and based on reflected light, it captures small variations in key displacement, velocity, and acceleration without altering the instrument's acoustic properties.

Twenty internationally recognized pianists were recorded while asked to produce a set of expressive timbres (for example, bright vs. dark, light vs. heavy). The sensor data produced a high-dimensional description of each keystroke: onset velocity, acceleration during escapement (the brief moment when the action releases the hammer), timing overlap between adjacent notes, subtle deviations in hand synchronization, and other micro-movements.
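As a rough illustration of how velocity and acceleration could be derived from position samples taken every 1 ms, the sketch below applies finite differences to a made-up key trajectory. The function names, the synthetic data, and the choice of central differences are assumptions for illustration; the article does not describe how HackKey data were actually processed.

```python
from typing import List, Tuple

FRAME_RATE_HZ = 1000          # from the article: 1,000 frames per second
DT = 1.0 / FRAME_RATE_HZ      # 1 ms between consecutive samples


def central_difference(samples: List[float], dt: float = DT) -> List[float]:
    """Estimate the time derivative of evenly spaced samples.

    Interior points use a central difference; the two end points fall back
    to one-sided differences so the output has the same length as the input.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    deriv = [0.0] * n
    deriv[0] = (samples[1] - samples[0]) / dt
    deriv[-1] = (samples[-1] - samples[-2]) / dt
    for i in range(1, n - 1):
        deriv[i] = (samples[i + 1] - samples[i - 1]) / (2 * dt)
    return deriv


def key_kinematics(position_mm: List[float]) -> Tuple[List[float], List[float]]:
    """Return (velocity in mm/s, acceleration in mm/s^2) for one key trace."""
    velocity = central_difference(position_mm)
    acceleration = central_difference(velocity)
    return velocity, acceleration


# Hypothetical trace: key depth under constant acceleration of 2000 mm/s^2,
# i.e. depth = 0.5 * a * t^2, sampled for 11 frames (10 ms).
positions = [0.5 * 2000.0 * (i * DT) ** 2 for i in range(11)]
velocity, acceleration = key_kinematics(positions)
```

Real sensor traces are noisy, and differentiation amplifies noise, so a practical pipeline would likely smooth or filter the signal before differentiating.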

In parallel, the acoustic output of the piano was recorded and normalized so that listeners could not rely on obvious cues like gross amplitude or tempo to make timbre judgments. Forty listeners—half professional pianists and half musically untrained participants—took part in psychophysical listening tests. They were presented with recorded excerpts and asked to report perceived timbral qualities and choose which performance matched the pianist's stated intent.
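The article does not say how the recordings were normalized. One simple possibility, shown here only as an assumed example, is to scale every excerpt to the same root-mean-square (RMS) level so that overall amplitude no longer distinguishes them. The target level and the sample values are hypothetical, and a perceptual loudness measure could equally have been used.

```python
import math
from typing import List


def rms(signal: List[float]) -> float:
    """Root-mean-square level of a sampled signal."""
    if not signal:
        raise ValueError("signal must not be empty")
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def normalize_rms(signal: List[float], target_rms: float = 0.1) -> List[float]:
    """Scale the signal so its RMS level equals target_rms (assumed value)."""
    current = rms(signal)
    if current == 0.0:
        return list(signal)  # pure silence cannot be rescaled
    gain = target_rms / current
    return [x * gain for x in signal]


# Two hypothetical excerpts with the same waveform shape but different levels.
loud = [0.8, -0.8, 0.8, -0.8]
soft = [0.2, -0.2, 0.2, -0.2]
loud_n = normalize_rms(loud)
soft_n = normalize_rms(soft)
```

After this step both excerpts have the same RMS level, so any remaining audible difference has to come from something other than gross amplitude.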

Key discoveries: specific movement features drive perceived timbre

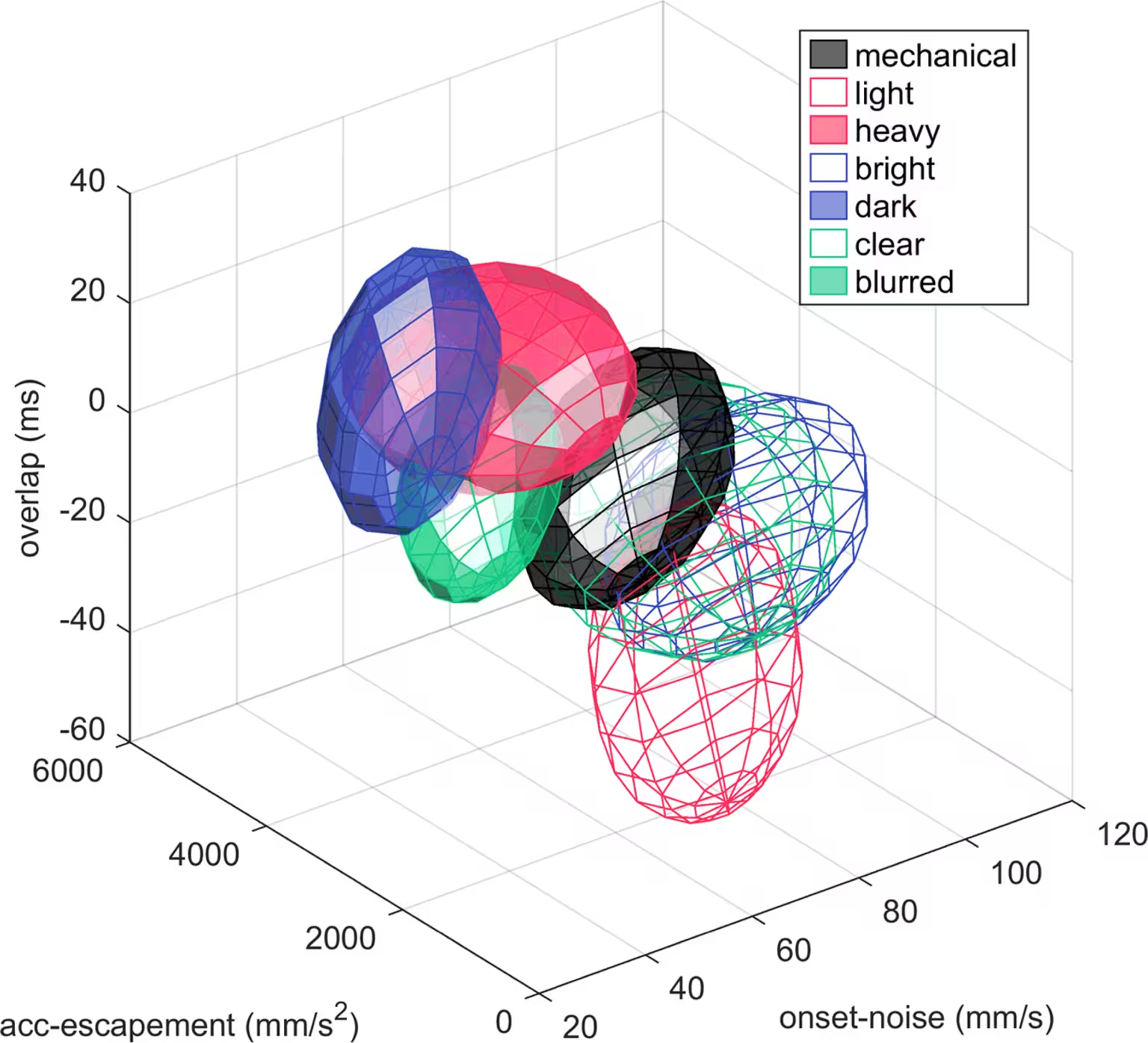

The listening tests revealed that listeners could reliably identify the timbral intentions of performers. Crucially, the discrimination persisted even when controlling for loudness and tempo. Data analysis using linear mixed-effects models found that a relatively small subset of movement features explained most of the perceptual variance. Among the most influential features were:

- Acceleration during escapement (acc-escapement): a brief burst of acceleration as the key and action pass through the escapement mechanism.

- Initial key-onset velocity and related onset noise metrics (onset-noise).

- Temporal overlap between consecutive keystrokes (overlap), which affects perceived legato or separation.

- Small asynchronies between hands (hand-synchronization deviation).
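To make the overlap feature concrete, the sketch below computes it as the release time of one keystroke minus the onset time of the next, so that positive values mean the notes overlap (legato) and negative values mean a gap. This definition, the `Keystroke` type, and the timings are assumptions for illustration; the article does not give the exact formula used in the study.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Keystroke:
    onset_ms: float    # time at which the key starts to descend
    release_ms: float  # time at which the key is released


def overlaps_ms(strokes: List[Keystroke]) -> List[float]:
    """Overlap between each consecutive pair of keystrokes, in milliseconds.

    Positive: the earlier key is still held when the next one starts.
    Negative: there is a silent gap between the two keystrokes.
    """
    ordered = sorted(strokes, key=lambda s: s.onset_ms)
    return [prev.release_ms - nxt.onset_ms
            for prev, nxt in zip(ordered, ordered[1:])]


# Hypothetical timings for a legato and a detached passage.
legato = [Keystroke(0.0, 520.0), Keystroke(500.0, 1010.0), Keystroke(1000.0, 1500.0)]
detached = [Keystroke(0.0, 400.0), Keystroke(500.0, 900.0)]

legato_overlap = overlaps_ms(legato)      # [20.0, 10.0]
detached_overlap = overlaps_ms(detached)  # [-100.0]
```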

Figure 2: Separation of Timbres by Key Movement Features. Timbres are separated based on key movement feature values. Different colors represent different timbres. Key movement feature values include acceleration when key escapement is reached (acc-escapement), initial velocity when the key is struck (onset-noise), and temporal overlap of consecutive keystrokes (overlap). Different timbres are grouped at distinct positions within this space. Credit: NeuroPiano Institute
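For readers unfamiliar with linear mixed-effects models, one generic form such a model could take is sketched below. It is an illustrative specification, not the one reported in the paper: the symbols, the choice of random intercepts for pianists and listeners, and the normality assumptions are all assumptions made here.

$$
y_{ijk} = \beta_0 + \sum_{m=1}^{M} \beta_m \, x_{m,ik} + u_i + v_j + \varepsilon_{ijk},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
v_j \sim \mathcal{N}(0, \sigma_v^2),\quad
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2)
$$

Here $y_{ijk}$ is the timbre rating given by listener $j$ to excerpt $k$ played by pianist $i$, $x_{m,ik}$ is the $m$-th movement feature of that excerpt (for example acc-escapement, onset-noise, or overlap), the fixed effects $\beta_m$ capture how strongly each feature relates to the rating, and the random intercepts $u_i$ and $v_j$ absorb differences between individual pianists and individual listeners.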

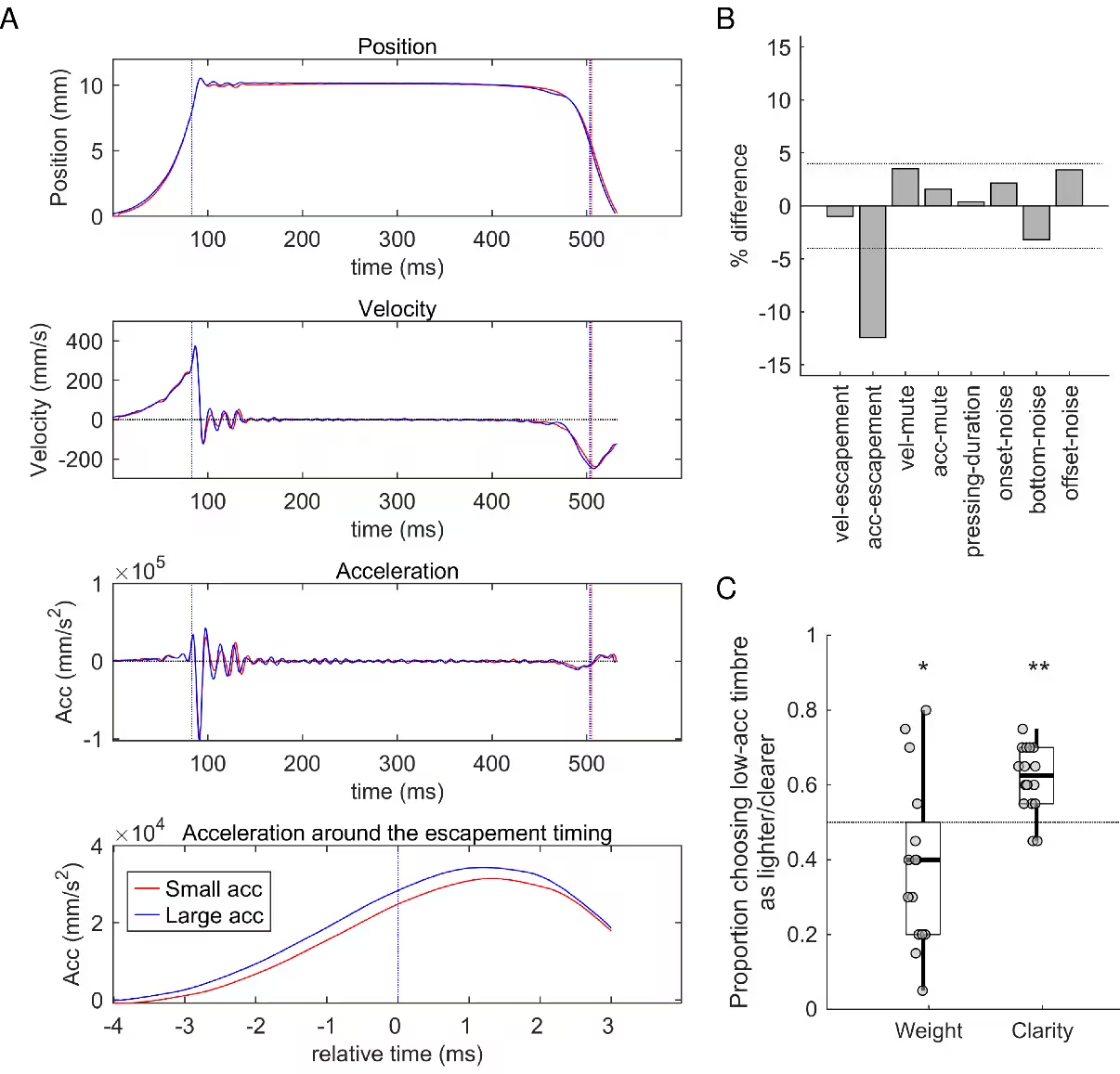

To move beyond correlation, the researchers conducted controlled trials in which only a single movement parameter was varied while all other measured features were held nearly constant (differences below 5%). When listeners compared pairs of notes differing in just one movement dimension, most notably acceleration during escapement, they consistently reported different timbral impressions such as "heavier" or "clearer." These psychophysical results provide strong empirical evidence of a causal link between micro-movements at the keyboard and perceived piano timbre.

Figure 3: Keystrokes That Differ Only in Specific Movement Feature Values Produce Different Timbre Perceptions. (A) Keystrokes that, among all key movement features, differ only in acceleration during escapement passage. (B) Keystrokes that differ only in acceleration during escapement passage, with differences in other key movement feature values below 5%. (C) Results of psychophysical experiments (listening tests) demonstrating that this difference in acceleration alters timbral perceptions such as weight and clarity. Credit: NeuroPiano Institute

Implications for music education, neuroscience, and technology

Quantifying how subtle motor control maps to high-level perceptual outcomes transforms an element of "tacit knowledge" in artistic training into explicit, teachable information. For piano pedagogy, the study suggests several near-term applications:

- Objective practice tools that display the movement features linked to desired timbres, enabling targeted motor learning and faster acquisition of expressive techniques.

- Evidence-based caution against developing maladaptive gestures: sensor feedback could highlight unhealthy motion patterns that increase injury risk.

- Personalized recommendation systems that suggest specific kinematic targets (e.g., launch acceleration profiles) to achieve a given tonal goal.

Beyond education, the findings illuminate how the brain integrates motor commands and sensory feedback to form aesthetic judgments. The observation that the same acoustic energy can be perceived differently depending on minute differences in how it was produced points to multisensory and motor-sensory integration processes underlying higher-order perception. Such insights can inform rehabilitation strategies that rely on retraining fine motor skills in contexts ranging from post-stroke therapy to occupational therapy for surgeons and craftsmen.

In engineering and human–machine interface design, precise mappings between intentional micro-movements and perceptual output could enable more expressive digital instruments and co-creative systems. For example, a digital piano or piano-to-synthesis interface that reproduces or exaggerates movement-linked timbre cues could allow performers to shape tone more deliberately in electronic contexts.

Research context and funding

This work was supported by flagship Japanese initiatives focused on advanced basic research and transformational technologies. Funding and programmatic context include:

- JST Strategic Basic Research Program (CREST), Research Area: Core Technologies for Trusted Quality AI Systems, Research Theme: Building a Trusted Explorable Recommendation Foundation Technology (Research Period: Oct 2020–Mar 2026).

- Moonshot Research & Development Program, Research Area: Realization of a society in which human beings can be free from limitations of body, brain, space, and time by 2050, Research Theme: Liberation from Biological Limitations via Physical, Cognitive and Perceptual Augmentation (Research Period: Oct 2020–Mar 2026).

The cooperative environment between neuroscience, computer science (Sony CSL), and music performance research (NeuroPiano Institute) enabled the integration of precision hardware, psychophysics, and advanced statistical modeling required for these findings.

Expert Insight

"This study converts something musicians have long relied on as intuition into actionable science," says Dr. Elena Martens, a neuroscientist and motor-control specialist at the University of Amsterdam (fictional comment for context). "By showing that a limited set of kinematic features can reliably alter timbre perception, the team has opened a pathway to objective training tools and to research that connects motor learning with esthetic experience. The implications extend from conservatory practice rooms to neurorehabilitation clinics and digital instrument design."

Methodological strengths and limitations

Strengths of the study include the combination of extremely high temporal and spatial resolution measurements with carefully controlled listening experiments. The use of mixed-effects statistical models allowed the team to account for variability across performers and listeners while isolating consistent movement–perception relationships.

Limitations and areas for future work include:

- Generalizability across instruments and rooms: this research focused on a particular acoustic piano and a particular set of recording conditions. Replication across different piano models, actions, and acoustic environments will clarify how universal the identified movement–timbre mappings are.

- Long-term learning: while the study demonstrates that movement features causally influence timbre perception, longitudinal studies are needed to determine how novices can learn these motor patterns and how durable that learning is.

- Neural mechanisms: connecting these kinematic features to specific neural circuits for motor planning and multisensory integration is an open line of interdisciplinary inquiry.

Future prospects: education, performance, and technology

Several practical developments are foreseeable in the next five to ten years:

- Sensor-enabled practice systems: affordable versions of non-contact key tracking could be integrated into digital pianos and acoustic instruments to provide real-time feedback on movement features tied to timbre.

- Augmented instruments and synthesis models: synthesizers could incorporate movement-based control laws that map measured keystroke micro-dynamics directly to timbral parameters, allowing electronic keyboards to preserve the expressive palette of acoustic performance.

- Clinical applications: rehabilitation programs that retrain fine motor control could use timbre-linked feedback to motivate and measure progress in patients recovering dexterity.

More broadly, the study exemplifies how precise measurement technologies—originally developed for engineering and robotics—can illuminate human creativity. The crossover between high-performance sensing, machine learning, and the arts heralds a new era in which expressive skills are both better understood and better taught.

Conclusion

The NeuroPiano Institute and Sony CSL study provides the first robust, experimentally confirmed demonstration that pianists can shape piano timbre through controlled fingertip movements. By linking a constrained set of kinematic features to consistent perceptual outcomes, the research converts artistic tacit knowledge into quantifiable data that can inform pedagogy, neuroscience, clinical practice, and instrument design. The work opens routes to evidence-based educational tools, new interfaces for musical expression, and interdisciplinary studies of how motor control and perception combine to produce aesthetic experience.


Source: scitechdaily
