
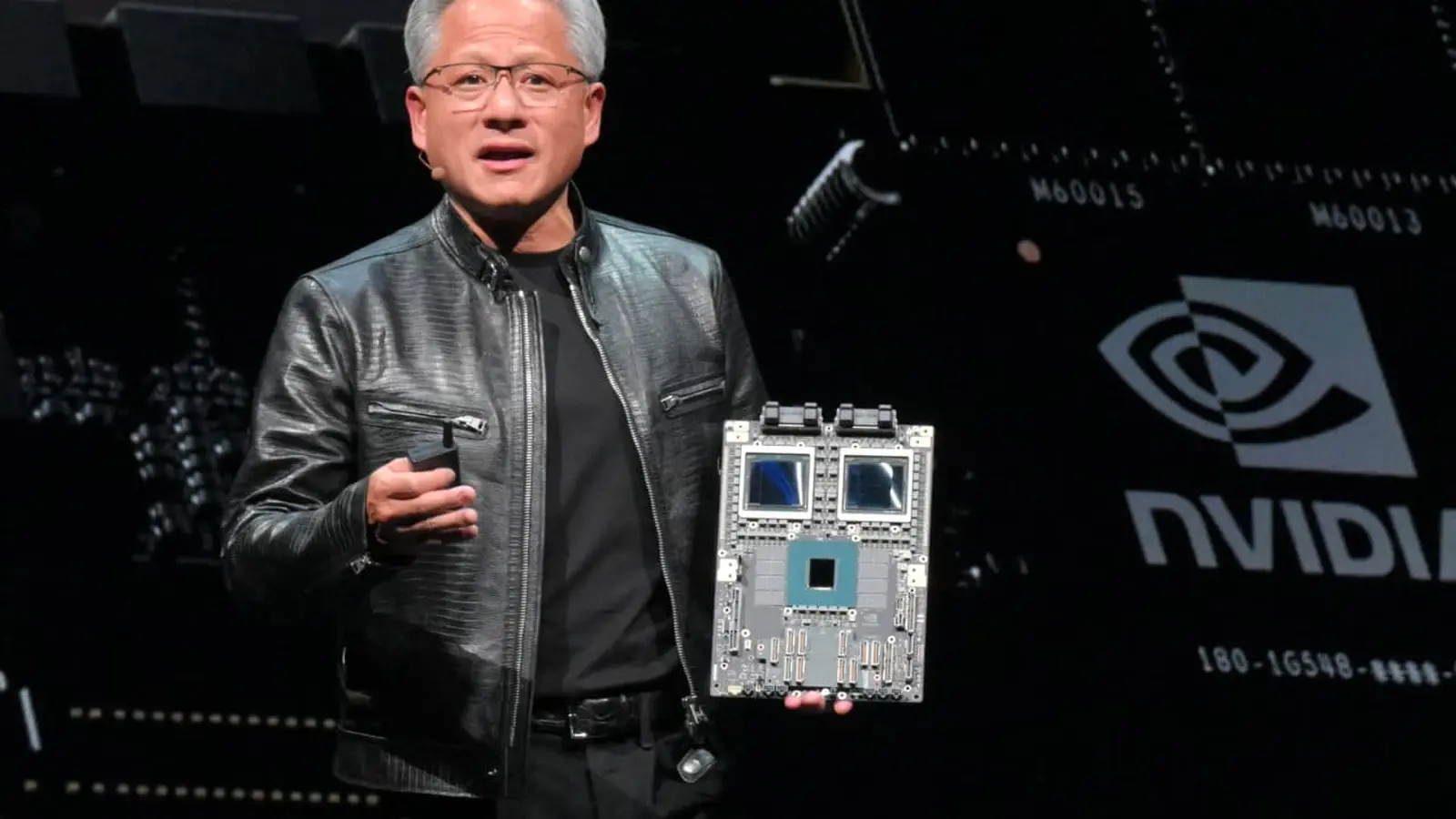

At GTC 2025, NVIDIA gave the first public look at the Vera Rubin Superchip: two gargantuan Rubin GPUs paired with a Vera CPU, with plenty of LPDDR memory around the edges of the board. Now, reports say Rubin has moved from lab demos to production lines, and NVIDIA has secured HBM4 memory samples from every major DRAM supplier.

From demo to assembly line: what changed

During a recent visit to TSMC, CEO Jensen Huang reportedly confirmed that Rubin GPUs are now on the production line. That follows NVIDIA's earlier announcement that lab samples had arrived, an unusually fast jump from prototype to production. If accurate, it would accelerate the timetable for Rubin, NVIDIA's next big AI accelerator aimed at hyperscale data centers.

Supply chain moves: TSMC steps up, HBM4 arrives

Demand for NVIDIA's Blackwell family remains intense, and TSMC has been working to keep pace. The foundry has reportedly boosted its 3nm capacity by roughly 50% to support NVIDIA's orders. TSMC chairman and CEO C.C. Wei confirmed that NVIDIA is asking for significantly more wafers, though he called the exact figures "secret."

On the memory side, NVIDIA has reportedly obtained HBM4 samples from all the major DRAM manufacturers. HBM4 is expected to provide the memory bandwidth Rubin will need for large AI models, and sourcing from multiple vendors helps NVIDIA hedge against shortages.

Timing, mass production and what to expect

NVIDIA has said Rubin could enter mass production around Q3 2026 or potentially earlier. It’s important to distinguish early risk-production runs from full-scale mass production: samples and pilot lines validate silicon and packaging, while mass production signals the start of volume shipments to cloud providers and OEMs.

Rubin is already tied to big commercial bets. NVIDIA's roadmap and a reported multi-billion-dollar partnership with OpenAI underscore how the new accelerators could power the next wave of large-scale AI deployments.

Why this matters for AI infrastructure

Imagine data centers scaling up with Rubin-class accelerators and HBM4 memory: higher throughput, reduced training time, and denser inference clusters. For cloud providers, chipmakers, and AI labs, Rubin’s move into production — paired with TSMC’s capacity push and multi-supplier HBM4 sourcing — lowers the risk of bottlenecks as model sizes keep growing.

Whether Rubin reaches the market on an accelerated timetable or follows a more conservative rollout will shape competition across GPUs, CPUs, and custom accelerators. For now, the story is one of tight demand, aggressive foundry support, and memory suppliers lining up to feed the next generation of AI hardware.

Source: wccftech
