5 Minutes

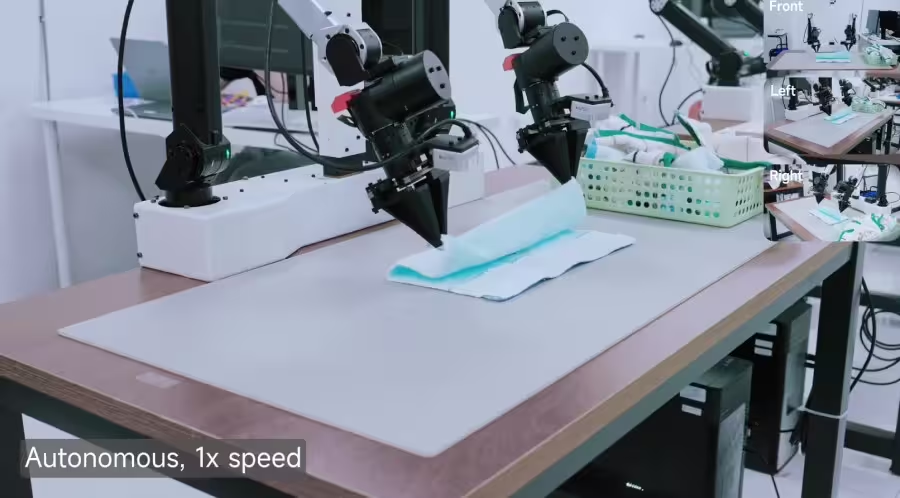

A towel folded like it had been handled by a careful human. Blocks disassembled with steady hands. Small feats, perhaps, but telling ones. Xiaomi's new Robotics-0 model isn't a flashy consumer gadget; it's an attempt to teach machines how to see, understand, and move with the kind of finesse we've long blamed on human intuition.

At its heart Robotics-0 tries to close the loop that defines any capable robot: perception, decision, execution. The company frames this as “physical intelligence” — a terse phrase that hides a stack of difficult problems. How do you keep a system sharp at language and image reasoning while also training it to make millimeter-perfect motions? Xiaomi's answer is an architecture that splits the thinking from the moving.

One side is a Visual Language Model — think of it as the robot's interpreter. It digests high-resolution camera feeds and human instructions, even the vague ones: “Please fold the towel.” It handles object detection, spatial relationships, visual Q&A, and the kind of commonsense reasoning that turns pixels into tasks. The other side is the Action Expert: a Diffusion Transformer designed not to spit out a single motor command but to produce an “Action Chunk” — a short sequence of coordinated movements. In practice this means smoother transitions and fewer jerky corrections.

The engineering choice behind this split is called a Mixture-of-Transformers architecture. Responsibilities are parceled out rather than forced into one monolithic model. That helps address a notorious problem: once you train a vision-language model to act, it often loses some of its reasoning edge. To avoid that, Xiaomi co-trains on multimodal data and action trajectories so the model keeps its head while learning to move its hands.

Training is a staged affair. First comes an Action Proposal step where the visual model predicts distributions over plausible actions while it reads an image. That aligns internal representations of seeing and doing. Then the visual part is frozen. The Diffusion Transformer trains to denoise action sequences — turning noisy guesses into executable motion — guided by key-value features rather than discrete language tokens.

Real robots also reveal practical frictions. Latency, for one. If the model pauses to think, the robot often freezes or wobbles. Xiaomi addresses that with asynchronous inference: computation and hardware run semi-independently so motion stays continuous even when the model is still calculating. They also feed earlier predicted actions back into the system — a “Clean Action Prefix” — which helps damp jitter and maintain momentum. An attention mask shaped like a lambda (Λ) nudges the system to favor current visual cues over stale history, improving responsiveness to sudden changes.

Benchmarks tell part of the story. Xiaomi reports top results across LIBERO, CALVIN, and SimplerEnv simulations, outperforming roughly 30 peer systems. Numbers matter, but so do real-world tests. On a dual-arm platform, Robotics-0 handled long-horizon tasks such as towel folding and block disassembly, showing steady hand-eye coordination and handling both rigid and flexible objects without glaring failure modes.

There’s another practical point: Xiaomi is releasing Robotics-0 as open source. That matters for research velocity. When teams can inspect code, replicate experiments, and build on each other's work, progress accelerates. Expect follow-up papers, forks, and, likely, rapid iterations applying the same VLA (vision-language-action) ideas to different hardware.

Robotics-0 doesn't solve every problem. Soft-object manipulation, generalization to wildly different environments, and full autonomy remain open challenges. But the model indicates a pragmatic direction: keep perception and action tightly aligned without forcing one to cannibalize the other. It's a reminder that progress can come from architecture choices as much as from bigger models.

If you care about where robots move next, watch for how this model behaves outside Xiaomi's labs and which parts the community keeps and refines. The next time a household bot folds your towel, you might glimpse the fingerprints of Robotics-0 in every smooth fold.

Source: gizmochina

Leave a Comment