
New research from the University of Pennsylvania suggests that artificial intelligence can extract personality cues from photos and use them to predict job outcomes. The finding raises questions about hiring automation, lending decisions, and the ethics of using facial analysis as a signal of employability.

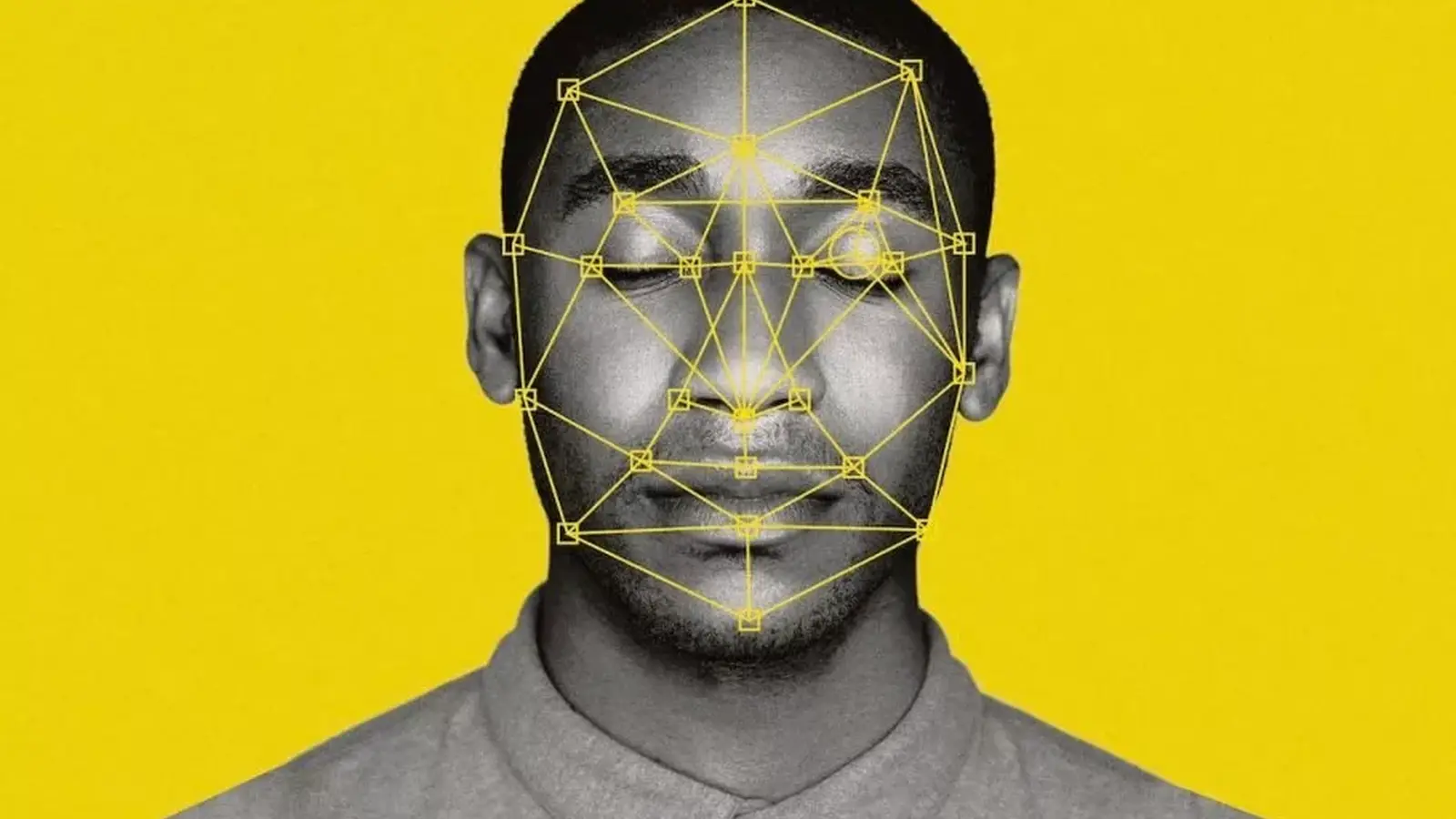

How the study worked — faces, algorithms, and the Big Five

The team trained a machine-learning model using data from previous research that linked facial appearance to personality. They collected LinkedIn profile photos of 96,000 MBA graduates and used the AI system to estimate each person's standing on the five major personality traits (the "Big Five"): openness, conscientiousness, extraversion, agreeableness and neuroticism.

From pixels to personality scores

The AI scanned facial features and produced trait estimates, which the researchers then compared with real-world career metrics, including income and other labor-market outcomes. The analysis found statistically significant correlations: facially inferred extraversion was the strongest positive predictor of salary, while inferred openness was associated with lower earnings in this sample.
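To make "statistically significant correlation" a little more concrete, here is a minimal Python sketch of one common way to test such an association: compute a Pearson correlation between a trait score and salary, then the t statistic used to judge whether that correlation differs from zero. This is an illustration, not the authors' method. The article does not describe their exact statistical model, which may involve controls or regressions. The function names `pearson_r` and `t_statistic` and the toy numbers below are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (unnormalized; the 1/n factors cancel in r).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r, n):
    """t statistic for H0: true correlation is zero, with n - 2 degrees of freedom."""
    if n < 3:
        raise ValueError("need at least 3 observations")
    if abs(r) >= 1:
        # A perfect correlation gives an unbounded statistic.
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r ** 2))


if __name__ == "__main__":
    # Invented toy numbers for illustration only (not data from the study):
    # a hypothetical inferred extraversion score and salary in thousands.
    extraversion = [0.2, 0.5, 0.4, 0.9, 0.7]
    salary = [95, 110, 102, 140, 118]
    r = pearson_r(extraversion, salary)
    print("r =", round(r, 3), "t =", round(t_statistic(r, len(salary)), 3))
```

A large absolute t value (compared against a t distribution with n - 2 degrees of freedom) is what lets researchers call a correlation "statistically significant." It says nothing about causation, and with tens of thousands of observations even small correlations can clear that bar.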

Why this matters: hiring, lending and algorithmic fairness

Imagine automated screening systems that add a facial‑analysis layer to résumés and interviews. According to coverage in The Economist and the paper published on SSRN, companies driven by financial incentives might adopt such tools to refine hiring, rental or credit decisions. That prospect is jarring for many: rejecting candidates because an algorithm predicts “undesirable” personality traits from their face risks entrenching bias and violating anti‑discrimination norms.

The study’s authors emphasize caution. They describe the model’s outputs as an additional information source — not definitive proof of a person’s character or future. The field of facial analysis for behavioral traits is still young, and accuracy varies across populations and contexts. Mistakes can amplify social inequities if employers, banks or landlords treat algorithmic inferences as decisive.

Possible benefits, real risks and unintended consequences

Proponents argue there may be scenarios where facial analysis helps in the absence of other data — for instance, offering credit to people with limited financial histories. Yet the paper warns about long‑term behavioral effects: widespread use of facial‑analysis tools could push people to modify their appearance digitally or even through cosmetic procedures to game automated systems.

There are also legal and technical challenges. Facial recognition and trait inference tools intersect with privacy laws, anti‑discrimination statutes and emerging regulations on automated decision systems. Without robust transparency, auditing and fairness safeguards, deployment could cause harm at scale.

What to watch next

Researchers are continuing to test whether facially inferred signals carry predictive value across different job markets and demographic groups. Independent replication, open datasets, and public policy debate will be essential to determine legitimate uses — if any — of AI personality inference in hiring or credit underwriting.

For now, the study is a reminder that machine learning can find patterns humans might miss — but pattern ≠ proof. Policymakers, employers and technologists must weigh potential utility against risks to fairness, privacy and individual autonomy.
