Researchers at Northwestern University have built a soft, wireless implant that communicates with the brain using patterned flashes of light. By projecting programmable micro-LED sequences through the skull, the device teaches animals to treat those light patterns as new, meaningful signals that guide decisions and behavior.

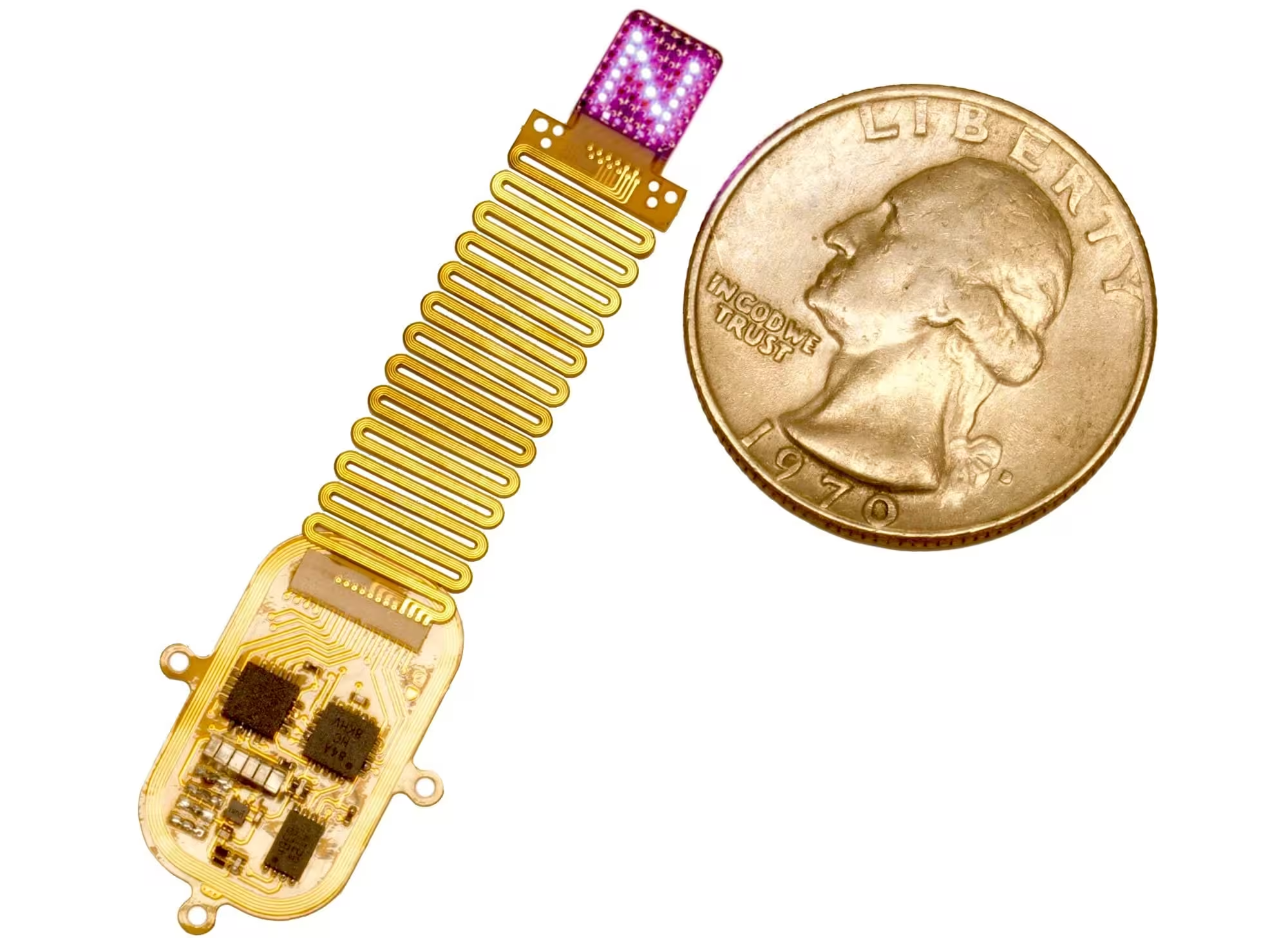

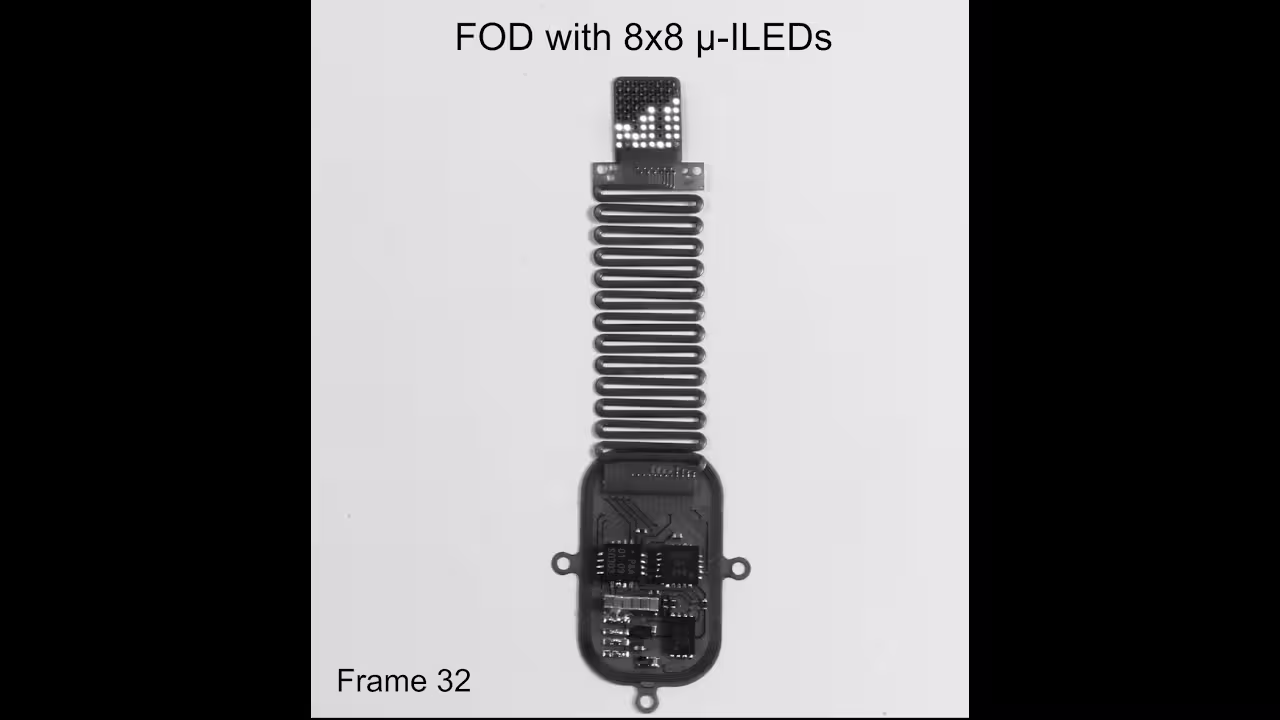

The thin, flexible, wireless device sits next to a quarter for scale. The device emits complex patterns of light (shown here as an “N”) to transmit information directly to the brain.

How light becomes a new sense: the device and the idea

Optogenetics — the technique of making neurons respond to light — has transformed neuroscience, but until recently it depended on rigid fiber optics and bulky hardware that limited natural behavior. This new implant reimagines that concept. It is a soft, conformable array of micro-LEDs that lies under the scalp and rests on the skull, wirelessly powered and programmable in real time. Instead of delivering light through implanted probes, the system sends patterned red light through bone to activate genetically sensitized cortical neurons across broad regions.

“We designed a system that can produce the complex, distributed patterns the brain expects during real sensory experiences,” said John A. Rogers, whose lab developed the hardware. The array—compact as a postage stamp and thinner than a credit card—contains up to 64 individually addressable micro-LEDs, each smaller than a human hair. Software controls frequency, intensity and temporal sequences, creating a near-infinite vocabulary of light codes that the brain can learn to interpret.
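As a rough illustration of what "individually addressable" and software-controlled frequency, intensity and timing could look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the 8 x 8 layout, the `Frame` class, the `letter_n_mask` helper and the numeric values are hypothetical and are not taken from the researchers' software.

```python
from dataclasses import dataclass

GRID_ROWS = 8  # assumption: the 64 LEDs are laid out as an 8 x 8 grid
GRID_COLS = 8


@dataclass(frozen=True)
class Frame:
    """One step of a light code: which LEDs flash, and how (hypothetical model)."""
    leds: frozenset      # indices 0..63 of the LEDs that are lit
    frequency_hz: float  # pulse rate during this step
    intensity: float     # relative drive level, 0.0 to 1.0
    duration_ms: int     # how long the step lasts

    def __post_init__(self):
        # Reject frames that address LEDs outside the array or overdrive them.
        if any(not 0 <= i < GRID_ROWS * GRID_COLS for i in self.leds):
            raise ValueError("LED index out of range")
        if not 0.0 <= self.intensity <= 1.0:
            raise ValueError("intensity must be between 0 and 1")


def letter_n_mask():
    """Return LED indices that draw an 'N': two vertical bars plus a diagonal."""
    lit = set()
    for row in range(GRID_ROWS):
        for col in (0, row, GRID_COLS - 1):
            lit.add(row * GRID_COLS + col)
    return frozenset(lit)


def render(frame):
    """Draw a frame as text, '#' for a lit LED and '.' for a dark one."""
    return "\n".join(
        "".join("#" if r * GRID_COLS + c in frame.leds else "." for c in range(GRID_COLS))
        for r in range(GRID_ROWS)
    )


# Placeholder values: 20 Hz, half intensity, 100 ms are illustrative only.
n_frame = Frame(leds=letter_n_mask(), frequency_hz=20.0, intensity=0.5, duration_ms=100)
print(render(n_frame))
```

A full light code would then be a list of such frames played in order, which is where the large vocabulary of patterns comes from.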

From flashes to behavior: training animals on artificial codes

In lab trials, mice genetically engineered to express light-sensitive channels in cortical neurons were trained to recognize specific light sequences. The implant delivered bursts across four cortical regions, forming a distinct pattern that functioned like a coded message. Animals learned to associate that pattern with a reward and reliably navigated to the correct reward port when they detected the target sequence.
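The logic of the task can be sketched in a few lines of Python. The article does not say how the distractor patterns were constructed, so this sketch assumes, purely for illustration, that a code is the order in which four sites are stimulated and that every other ordering is a distractor. The region labels, `target` and `correct_port` are hypothetical names.

```python
from itertools import permutations

REGIONS = ("A", "B", "C", "D")  # placeholder labels for the four cortical sites

# Assumption: a code is defined by the order in which the sites are stimulated.
target = ("A", "B", "C", "D")
distractors = [p for p in permutations(REGIONS) if p != target]


def correct_port(sequence):
    """Return the port a trained animal should choose for a given sequence."""
    return "reward" if tuple(sequence) == target else "other"


print(len(distractors))                      # 23 alternative orderings
print(correct_port(["A", "B", "C", "D"]))    # reward
print(correct_port(distractors[0]))          # other
```

Under this assumption, an animal that reliably picks the reward port only for the target ordering is discriminating one pattern out of 24.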

“They can’t tell us what they 'feel,' so their choices reveal comprehension,” said neurobiologist Yevgenia Kozorovitskiy. Repeated trials showed that mice could distinguish the target pattern from many distractors, demonstrating that brains can treat these artificial, wirelessly delivered inputs as meaningful information, effectively reading light as a new sensory channel.

In a demonstration, the engineers programmed the device to display patterns of light in the sequence of a Tetris game. These complex patterns of light send information directly to the brain, bypassing the body’s natural sensory pathways.

Why this matters: implications for neural interfaces and neuroprosthetics

This advance is more than a laboratory curiosity. If patterned cortical stimulation can be reliably learned and used, it opens multiple pathways for clinical and technological applications:

- Neuroprosthetic feedback: Prosthetic limbs require sensory return to feel natural. Patterned light codes could deliver artificial touch or proprioception signals directly to cortex.

- Restoring lost senses: For patients with severe sensory pathway damage, cortical light stimulation may provide alternate channels for auditory or visual information.

- Pain management and rehabilitation: Non-pharmacological modulation of cortical circuits could aid pain control, plasticity after stroke, or motor recovery.

- Brain-computer interfaces (BCIs): Wireless, multichannel stimulation expands what BCIs can send to the brain—creating richer, bidirectional communication between machines and neural tissue.

Scientific background: building on optogenetics and soft bioelectronics

The new device is the next stage of a trajectory that combined optogenetics with advanced materials. Early optogenetic experiments used tethered fiber optics that constrained movement. In 2021, the teams at Northwestern introduced a fully implantable, battery-free device capable of single-site light control. The current array multiplies that capability: hundreds or thousands of distinct spatiotemporal patterns are now feasible by combining micro-LEDs across cortical areas.
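To get a feel for how quickly the pattern space grows with a 64-element array, the short Python calculation below counts a few simple families of patterns. These are raw combinatorial upper bounds under simplifying assumptions (each LED is just on or off, and frequency and intensity are ignored); they say nothing about how many patterns the cortex can actually tell apart.

```python
from math import comb, perm

N_LEDS = 64

# Static on/off masks: every LED is either lit or dark.
on_off_masks = 2 ** N_LEDS

# Masks with exactly four LEDs lit at once.
four_lit = comb(N_LEDS, 4)

# Ordered sequences that visit four different LEDs one after another.
four_step_sequences = perm(N_LEDS, 4)

print(on_off_masks)          # 18446744073709551616
print(four_lit)              # 635376
print(four_step_sequences)   # 15249024
```

Even the most restrictive family here already exceeds the "hundreds or thousands" of patterns mentioned above, so the practical limit is likely set by learning and discrimination rather than by the hardware.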

Because natural perception engages distributed networks rather than isolated points, the researchers emphasized patterned stimulation that mimics real-world cortical dynamics. Red wavelengths were chosen because they penetrate skull and tissue more effectively, allowing stimulation from the skull surface rather than deep insertion of probes. That reduces invasiveness while preserving broad cortical coverage.

Technical and ethical considerations

Key technical hurdles remain. The current work relies on animals whose neurons are genetically sensitized to light; translating the approach to humans would require alternative strategies for making neurons responsive, along with rigorous safety testing of light dosimetry, heating effects and long-term biocompatibility. Wireless power and real-time programmability already reduce infection and tether risks, but chronic implantation will demand careful device encapsulation and materials that withstand years of cortical motion.

Ethical questions are equally important. Delivering arbitrary signals to the brain raises issues about agency, consent, and unintended changes in perception or behavior. Any clinical rollout must balance potential benefits—restored sensation, reduced pain, better prosthetic function—against risks and ensure strong regulatory and ethical oversight.

What the experiments showed: key discoveries

The study, published December 8 in Nature Neuroscience, demonstrated several concrete results:

- Mice learned to interpret patterned micro-LED stimulation through the skull as distinct informational signals and used them to guide behavior.

- An arrayed, programmable 64-micro-LED system produced more complex cortical activation patterns than single-site stimulation, increasing the potential information bandwidth.

- The soft, subscalp design achieved stimulation without observable interference in natural behaviors—an advantage for more naturalistic studies.

Next steps: scaling patterns, coverage and wavelengths

Researchers plan to probe how many distinct patterns a brain can reliably learn and retain, expand LED counts and cortical coverage, shrink spacing between LEDs for finer spatial resolution, and test additional wavelengths to reach deeper brain structures. They will also explore algorithms for encoding sensory-like information into light sequences that the cortex can interpret quickly and accurately.

Expert Insight

“This work challenges traditional boundaries between sensation and stimulation,” says Dr. Laila Gomez, a fictional neural engineering specialist. “If the brain can learn to decode arbitrary patterns, it changes how we think about restoring functions: you don't always need to repair a broken pathway—you can provide an alternate channel that the brain will learn to use. Of course, translating this to humans will require new molecular tools or complementary electrical approaches to make neurons responsive and safe long-term.”

Broader context: how this links to brain-computer interfaces

Contemporary BCIs typically focus on reading neural signals—decoding intention to control cursors, robots, or prosthetic limbs. This research pushes the other direction: writing structured messages into cortex. Combining writing and reading promises bidirectional BCIs that both sense intent and supply relevant feedback, closing the loop in a way that could make assistive devices feel more natural and effective.

Real-world scenarios

Imagine a person controlling a robotic arm via neural signals while receiving patterned cortical feedback that conveys touch location and pressure. Or think of a cochlear implant analogue that bypasses damaged auditory nerves and delivers information as cortical light codes interpretable by the brain. Those scenarios are distant but now more conceptually grounded thanks to this work.

What researchers said

Yevgenia Kozorovitskiy described the platform as “a way to tap directly into how electrical activity becomes experience.” John A. Rogers emphasized the design challenge of delivering patterned stimulation in a minimally invasive, fully implantable format. Postdoctoral researcher Mingzheng Wu noted that moving from a single micro-LED to a 64-element array dramatically increases the combinatorial space of possible patterns—frequency, intensity and timing—to create rich, interpretable codes.

As the field advances, collaborative efforts across neuroscience, materials science, ethics, and clinical medicine will be essential to translate patterned cortical stimulation from mice to meaningful human therapies.

Source: scitechdaily

Comments

bioNix

Promising tech but feels overhyped. Training animals to read light codes ok, but scaling to human cortex, nontrivial. still, neat demo. needs ethics.

Tomas

Is this even safe long term? The genetics piece is huge, humans arent mice. heating, immune stuff, consent , who decides? sounds risky, curious though.

atomwave

wow this feels like sci fi but real… if that’s legit, imagine prosthetics with actual touch. kinda thrilling and kinda scary, ngl
